About 402.74 million terabytes of data are generated each day. This data is used to improve decision-making and gain insights. The difficulty lies in using big data properly and in line with current industry trends.

In this post, you’ll discover foundational concepts of big data, explore top big data trends for 2024-2028, and review specific applications of big data across industries. You’ll also examine several real-life success stories of businesses using big data.

Foundational concepts in big data

Let’s get started by delving into the “5 Vs” of big data, which are volume, velocity, variety, veracity, and value:

#1. Volume is the vast amount of data generated every second from various sources, including social media, sensors, transactions, videos, and more.

Basically, it comes down to the sheer mass of data that a company can access.

#2. Velocity is the speed at which new data is generated and the pace at which it must be processed to meet demand.

Data may be generated and gathered in real time but processed in batches at set intervals, or vice versa. The slowest process determines the end velocity.

#3. Variety is the different types of data, i.e., structured, semi-structured, or unstructured data, and the variability of sources, like text, images, videos, sensor data, and more.

In most scenarios, greater variety is better overall. Yet, it increases the overall complexity of any big data solution and raises standardization and unification issues.

#4. Veracity is the quality, reliability, and trustworthiness of data.

High veracity data has fewer inaccuracies and inconsistencies, making it more suitable for analysis.

#5. Value is the potential economic and business benefit that can be derived from data.

When analysis of particular data contributes the most to extracting insights and advancing business objectives, this data is regarded as high-value. Value is the most crucial V as it drives the decision to invest in big data technologies and analytics.

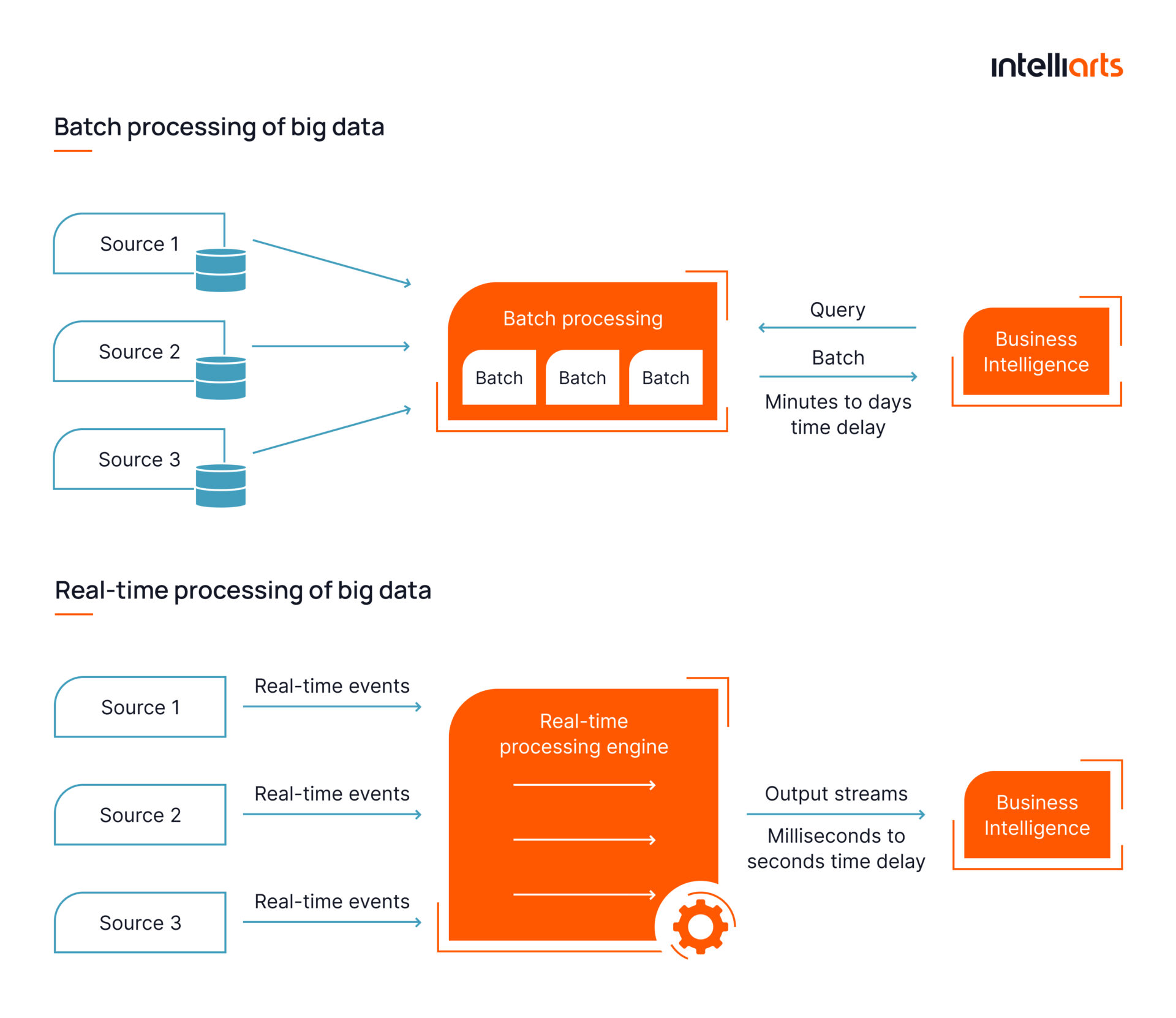

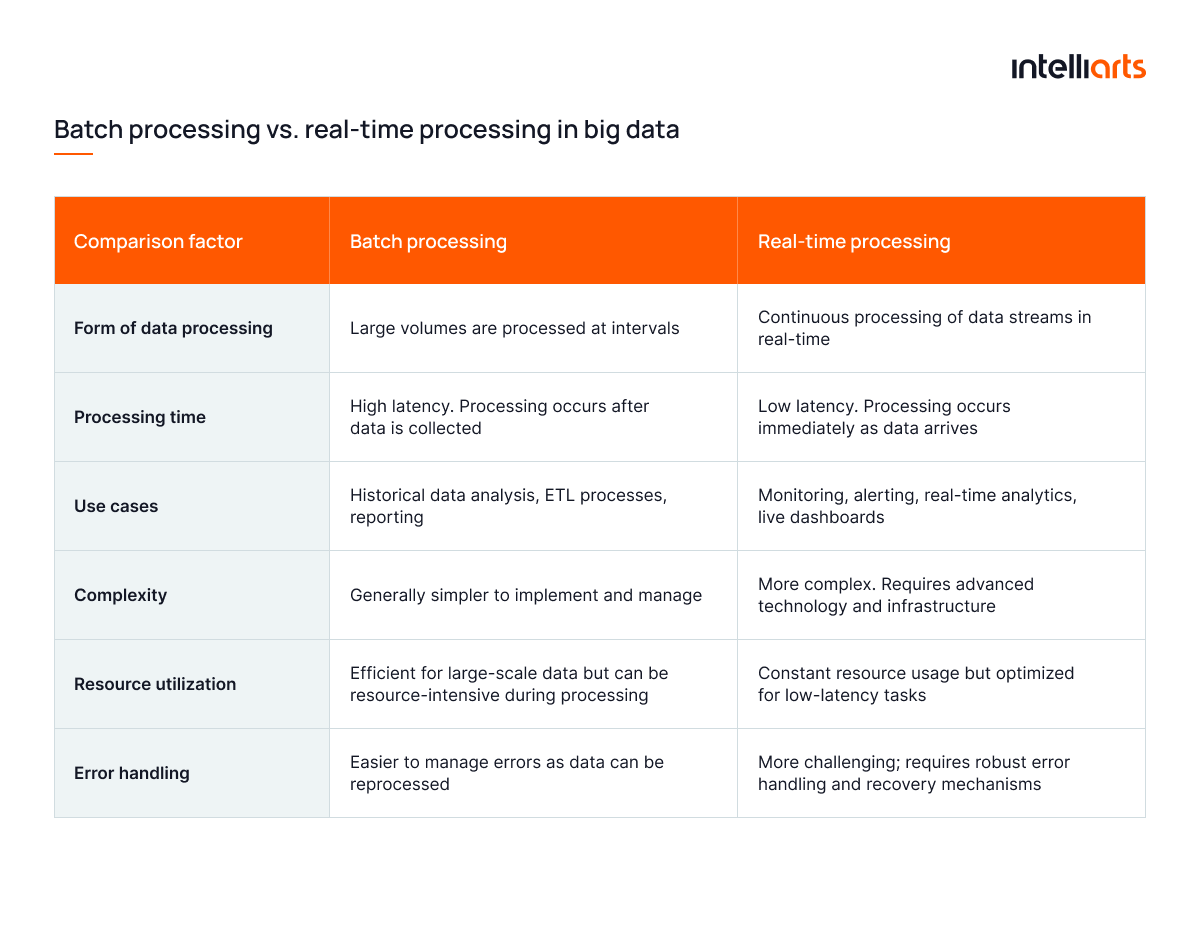

Aside from the five Vs, there are also concepts of batch and real-time processing of data:

Batch processing is collecting and processing large volumes of data at scheduled intervals or specific times.

The main characteristic of batch processing is that the data is accumulated over a period, stored, and then processed in a single batch.

Real-time processing is the continuous input, processing, and output of data.

With real-time processing, handling of data and moving it across the data pipeline occurs almost instantly when the data is received, allowing for immediate analysis and action.
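To make the contrast concrete, here is a minimal Python sketch of the two styles. The function names and the sample readings are made up for illustration; real pipelines would rely on dedicated batch or streaming frameworks rather than plain loops.

```python
from typing import Iterable, Iterator, List


def batch_average(readings: List[float]) -> float:
    """Batch style: wait until all readings are stored, then process them once."""
    return sum(readings) / len(readings)


def streaming_average(readings: Iterable[float]) -> Iterator[float]:
    """Real-time style: emit an updated average as soon as each reading arrives."""
    count = 0
    total = 0.0
    for value in readings:
        count += 1
        total += value
        yield total / count


readings = [10.0, 20.0, 30.0, 40.0]  # made-up sample data

batch_result = batch_average(readings)              # one result, after the fact
stream_results = list(streaming_average(readings))  # one result per incoming event

print(batch_result)
print(stream_results)
```

The batch version produces a single answer once the whole period is collected, while the streaming version has an up-to-date answer after every event.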

You can discover the key differences between batch and real-time processing in the table below:

Top trends in big data for 2025

Big data continues to evolve, shaping the way businesses make decisions and innovate. The latest data analytics trends in 2025 highlight the integration of AI, real-time analytics, and edge computing, driving smarter, faster, and more scalable solutions. These advancements are unlocking unprecedented opportunities across industries:

#1 Edge computing integration

Edge computing is about processing data closer to its source, such as IoT devices, sensors, and edge servers, instead of relying solely on centralized cloud data centers. This reduces the need to send large volumes of data to centralized cloud data centers, thereby addressing latency and bandwidth issues, which are still urgent in all big data-centered applications.

One example is solar panel fault detection, which relies on real-time processing of big data from infrared cameras to catch faults, such as overheated spots, early.
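As a rough illustration of processing at the edge, the Python sketch below scans a small temperature grid on the device and keeps only the overheated cells, so just a few records would need to travel to the cloud. The threshold, the grid values, and the `detect_hotspots` helper are assumptions made for this example, not part of any specific product.

```python
from typing import Dict, List

# Hypothetical threshold: cells hotter than this are treated as suspicious.
HOTSPOT_THRESHOLD_C = 85.0


def detect_hotspots(frame: List[List[float]],
                    threshold: float = HOTSPOT_THRESHOLD_C) -> List[Dict]:
    """Return only the cells of a temperature grid that exceed the threshold."""
    hotspots = []
    for row_index, row in enumerate(frame):
        for col_index, temperature in enumerate(row):
            if temperature > threshold:
                hotspots.append(
                    {"row": row_index, "col": col_index, "temp_c": temperature}
                )
    return hotspots


# A made-up 3x3 infrared frame (degrees Celsius) captured on the edge device.
frame = [
    [41.2, 42.0, 40.8],
    [43.1, 91.5, 42.7],
    [40.9, 41.4, 88.3],
]

alerts = detect_hotspots(frame)
print(alerts)  # only these records would need to leave the device
```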

Prospects for this trend:

- Increasing adoption in healthcare, transportation, and retail sectors.

- Development of more sophisticated edge computing devices and solutions.

- Greater integration with existing cloud infrastructure.

- Enhanced real-time analytics capabilities.

- Improved data security and privacy measures.

#2 Artificial intelligence, machine learning, and big data synergy

The synergy between AI and ML with big data involves leveraging these technologies to enhance data processing and analytics capabilities. AI and ML can automate the extraction of insights from large datasets, identify patterns, and make predictions, as in the case of EV data analytics.

For those interested in mastering these advanced skills, big data training courses offer comprehensive knowledge on integrating AI and ML for more sophisticated data-driven decision-making, enabling organizations to uncover insights that would be difficult to detect manually.

Prospects for this trend:

- Greater accuracy and efficiency in predictive analytics and forecasting.

- Increased personalization in customer interactions and experiences.

- Expanded use in anomaly detection and predictive maintenance.

- Development of more intuitive AI-driven data analytics platforms.

- Enhanced ability to process and analyze unstructured data, such as text and images.

You may discover how big data preparation contributes to machine learning in another blog post by Intelliarts.

#3 Advanced analytics and predictive modeling

Advanced analytics and predictive modeling in big data involve using complex statistical techniques and algorithms to analyze historical data and predict future trends. These methods help organizations understand underlying patterns, make data-driven decisions, and anticipate future events. Advanced analytics can provide deeper insights into data, while predictive modeling helps forecast outcomes based on historical data.
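As a very small example of forecasting from historical data, the Python sketch below fits a straight line to past values with ordinary least squares and extrapolates one step ahead. The sales figures are invented, and a linear trend is only one of many possible models; production forecasting typically uses richer techniques.

```python
from typing import List, Tuple


def fit_line(values: List[float]) -> Tuple[float, float]:
    """Least-squares fit of y = slope * t + intercept for t = 0, 1, 2, ..."""
    n = len(values)
    t_mean = (n - 1) / 2
    y_mean = sum(values) / n
    covariance = sum((t - t_mean) * (y - y_mean) for t, y in enumerate(values))
    variance = sum((t - t_mean) ** 2 for t in range(n))
    slope = covariance / variance
    intercept = y_mean - slope * t_mean
    return slope, intercept


def forecast_next(values: List[float]) -> float:
    """Predict the value for the next time step from the fitted trend."""
    slope, intercept = fit_line(values)
    return slope * len(values) + intercept


monthly_sales = [100.0, 110.0, 120.0, 130.0]  # made-up historical data

next_month = forecast_next(monthly_sales)
print(next_month)
```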

Prospects for this trend:

- More accurate demand forecasting and inventory optimization in supply chains.

- Enhanced ability to target marketing efforts based on predictive customer behavior.

- Improved healthcare outcomes through predictive modeling of patient data.

- Expansion into new areas such as environmental sustainability and climate change analysis.

- Development of real-time predictive models that continuously learn from new data.

#4 Real-time data processing and analytics

Real-time data processing and analytics involve continuous processing of data as it is generated, enabling immediate insights and decision-making. Big data analytics trends here mostly concern applications that require up-to-the-minute information, such as financial trading, fraud detection, and live monitoring systems. Maintaining such real-time data feeds often requires continuously scraping websites without getting blocked.
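To show what processing data as it arrives can look like, here is a simplified Python sketch that flags unusual transaction amounts against a sliding window of recent values. The window size, the z-score limit, and the sample amounts are assumptions chosen for the example; real fraud detection systems use far more signals than a single amount.

```python
from collections import deque
from statistics import mean, pstdev
from typing import Deque, Iterable, Iterator, Tuple


def flag_outliers(amounts: Iterable[float],
                  window: int = 5,
                  z_limit: float = 3.0) -> Iterator[Tuple[float, bool]]:
    """Yield (amount, is_outlier) for each event, using only recent history."""
    recent: Deque[float] = deque(maxlen=window)
    for amount in amounts:
        is_outlier = False
        if len(recent) == window:  # judge only once the window is full
            mu = mean(recent)
            sigma = pstdev(recent)
            if sigma > 0 and abs(amount - mu) / sigma > z_limit:
                is_outlier = True
        yield amount, is_outlier
        recent.append(amount)  # the oldest value drops out automatically


transactions = [20.0, 22.0, 19.0, 21.0, 20.0, 500.0, 21.0]  # made-up stream

flags = [is_outlier for _, is_outlier in flag_outliers(transactions)]
print(flags)
```

Each event is evaluated the moment it arrives, without waiting for a scheduled batch job.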

Prospects for this trend:

- Increased use in industries requiring instant data insights, such as finance, telecommunications, and e-commerce.

- Development of more robust and scalable real-time analytics platforms.

- Enhanced ability to handle high-velocity data streams from IoT devices.

- Improved customer experiences through real-time personalization and responsiveness.

- Greater adoption of streaming data technologies, such as Apache Kafka and Flink.

You may be additionally interested in exploring how big data collection works in another blog post by Intelliarts.

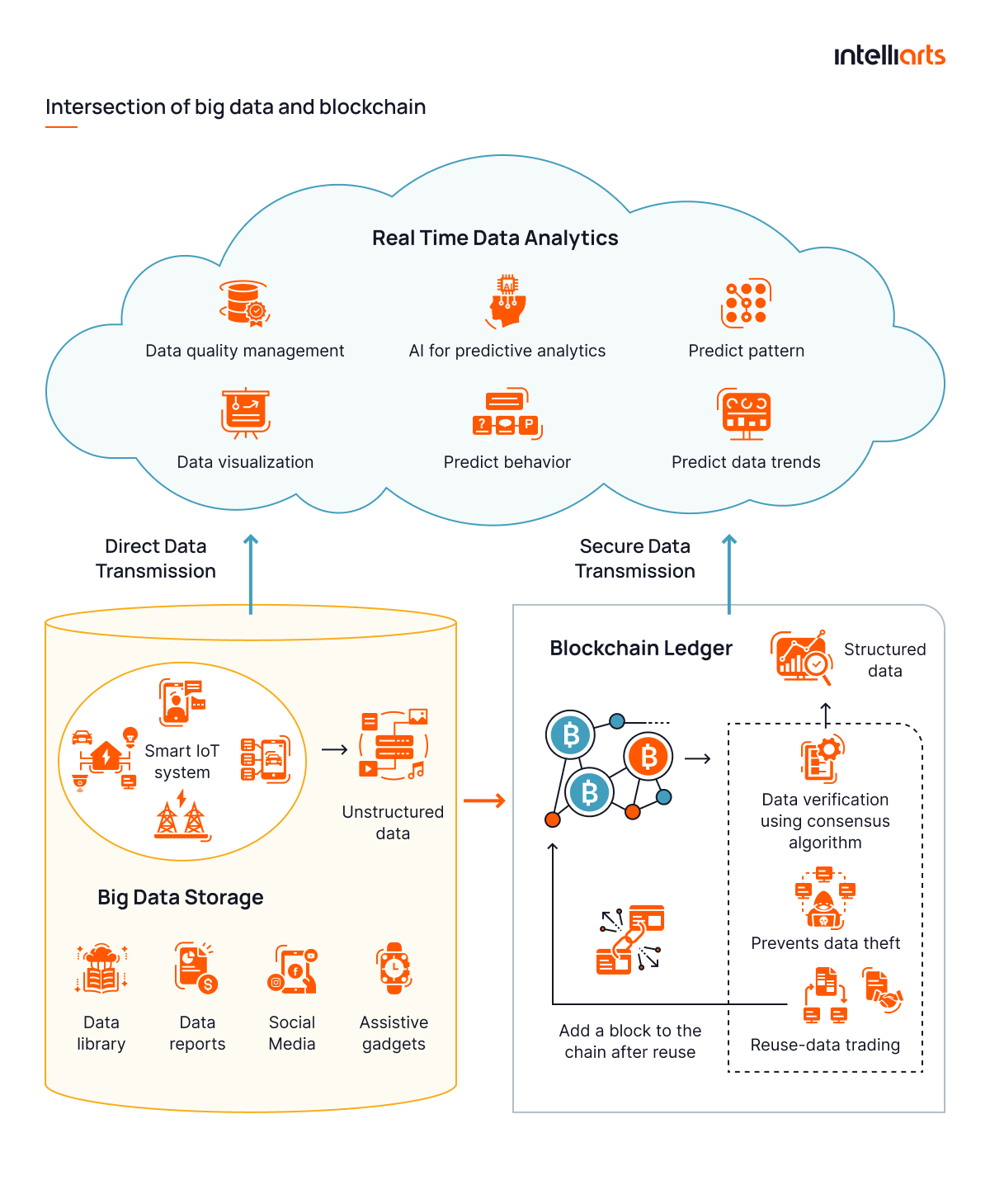

#5 Blockchain applications in big data

Blockchain technology, known for its secure and transparent nature, is being increasingly applied in big data to enhance data integrity, security, and traceability. Regarding big data, blockchain can provide a decentralized and tamper-proof ledger for recording transactions and data exchanges. This, in turn, benefits the accuracy and trustworthiness of big data taken from blockchain-based sources. (Note that engaging VARA license advisory support can help ensure compliance obligations are accurately understood and met, minimizing potential regulatory risks.)

Prospects for this trend:

- Increased use in sectors requiring high data integrity, such as finance, healthcare, and supply chain management.

- Development of blockchain-based data sharing and storage solutions.

- Enhanced security and privacy through immutable and decentralized data records.

- Greater transparency and accountability in data transactions.

- Integration with other big data technologies to create secure data ecosystems.

#6 Privacy and security considerations

As the volume and variety of data grow, so do the challenges of ensuring its privacy and security. Data is a valuable asset that should be protected. This trend in big data focuses on developing advanced methods and technologies to protect sensitive information from breaches, unauthorized access, and misuse. Many organizations are now partnering with the best cloud security companies to strengthen their data protection frameworks and ensure compliance with evolving privacy regulations.

Prospects for this trend:

- Increased adoption of advanced encryption methods and privacy-preserving techniques.

- Development of comprehensive data governance frameworks and policies.

- Enhanced focus on compliance with data protection regulations, such as GDPR and CCPA.

- Greater investment in cybersecurity measures to protect big data assets.

- The emergence of new technologies and approaches for secure multi-party computation and federated learning.

“Data privacy and security are major concerns across industries. We aim to mitigate cybersecurity challenges by constantly upgrading our protection methods, which requires research, innovation, investment, and, of course, following current best practices in the niche,” says Oleksandr Stefanovskyi, an ML team lead at Intelliarts.

#7 Hybrid and multi-cloud adoption

Hybrid and multi-cloud adoption involves using a combination of on-premises, private cloud, and public cloud services to manage and analyze big data. This approach offers greater flexibility, scalability, and cost efficiency in utilizing big data overall, which is a concern of many small and medium-sized companies. Hybrid and multi-cloud adoption allows for the leveraging of the best features of each cloud environment.

Prospects for this trend:

- Increased flexibility in managing data across different cloud environments.

- Enhanced disaster recovery and business continuity capabilities.

- Better cost management through optimized use of different cloud services.

- Greater collaboration and data sharing across hybrid cloud ecosystems.

- Development of unified cloud management platforms for seamless integration.

#8 Increased usage of data lakes

The data lakehouse, a new paradigm combining the benefits of data lakes and data warehouses, is gaining traction in the big data landscape. Giants like Databricks and Snowflake lead this trend by offering solutions that provide both the scalability of data lakes and the performance and reliability of data warehouses. This hybrid approach simplifies data management and enables advanced analytics on a unified platform, eliminating data silos and reducing the need for complex data pipelines.

Prospects for this trend:

- Greater adoption of data lakehouse architectures in industries needing scalable and flexible data storage.

- Improved performance and cost-efficiency in handling structured and unstructured data.

- Enhanced real-time analytics capabilities through unified data processing.

- Accelerated integration with machine learning and AI tools for more efficient data modeling.

- Broader support for collaboration across data teams by simplifying data governance and access control.

Databricks and Snowflake are regarded as two of the largest providers of data lake solutions on the market.

#9 Data governance and compliance

Data governance and compliance are becoming increasingly important as organizations handle larger volumes of sensitive and regulated data. It’s especially critical when it comes to medical, personal, and financial data. For example, as healthcare technology trends continue to evolve, there’s a stronger emphasis on data governance to meet regulatory standards and ensure the accuracy and integrity of patient data. This trend focuses on establishing policies, processes, and controls to ensure data quality, integrity, and compliance with requirements.

Prospects for this trend:

- Increased adoption of comprehensive data governance frameworks.

- Enhanced focus on data quality and integrity to support accurate analytics.

- Development of automated compliance tools to streamline regulatory adherence.

- Greater emphasis on ethical data use and privacy protection.

- Improved transparency and accountability in data management practices.

#10 Quantum computing impact on big data

Quantum computing has the potential to revolutionize big data analytics by providing unprecedented computational power to solve complex problems. It’s difficult to project the exact development of this trend, but it’s expected to enhance data processing, optimization, and predictive modeling far beyond the capabilities of classical computing.

Prospects for this trend:

- Development of quantum algorithms specifically designed for big data applications.

- Enhanced ability to analyze and process massive datasets at unprecedented speeds.

- Improvement in optimization problems, such as supply chain management and financial modeling.

- Greater investment in quantum computing research and development.

- Collaboration between academia, industry, and government to accelerate quantum computing advancements.

#11 Sustainable and ethical big data practices

Concerns about sustainability, renewable energy, and related issues have led to the emergence of sustainable and ethical big data practices. As an example, a single ChatGPT conversation uses about fifty centiliters of water, equivalent to one plastic bottle. This trend focuses on minimizing the environmental impact of data operations and ensuring the ethical use of data by emphasizing the importance of energy-efficient data centers and responsible data sourcing.

Prospects for this trend:

- Increased adoption of energy-efficient technologies and practices in data centers.

- Greater emphasis on responsible data sourcing and ethical data use.

- Development of frameworks and guidelines for ethical AI and data analytics.

- Enhanced transparency and accountability in data practices to build public trust.

- Collaboration between industry and regulatory bodies to promote sustainable and ethical data practices.

Every successful big data project starts by creating a big data strategy. Discover how to develop it in another of our blog posts.

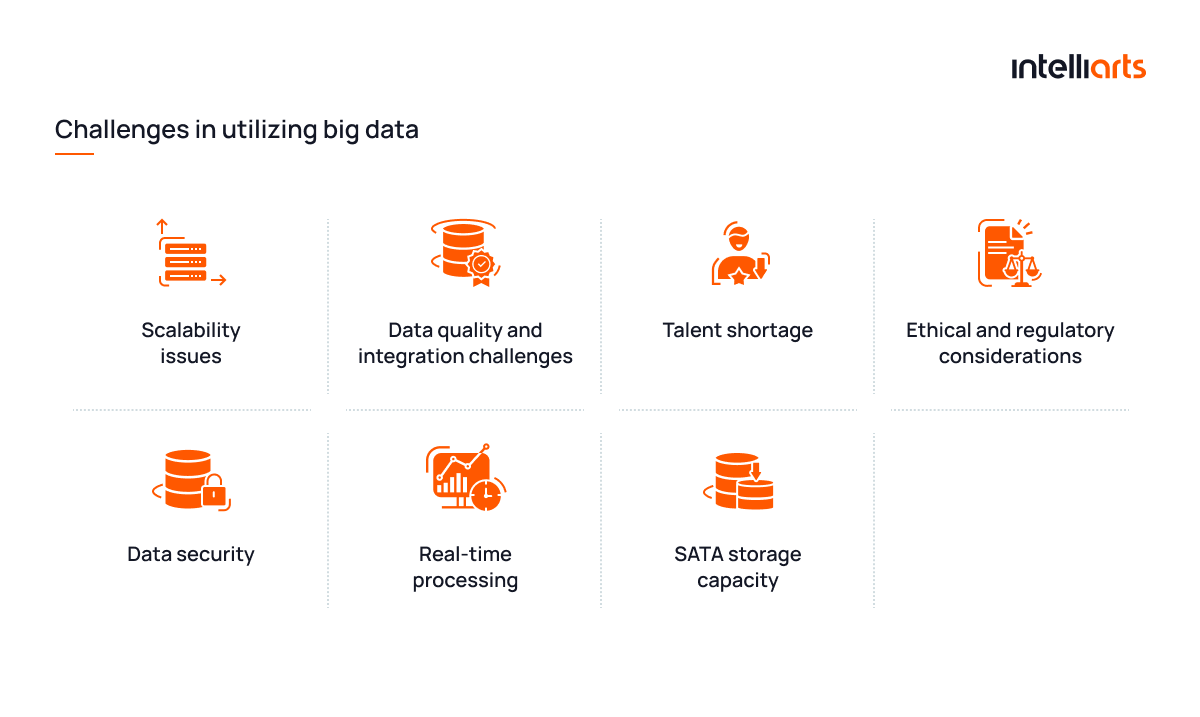

Challenges and solutions in big data

As with any other technology, the latest trends in big data analytics present both opportunities and challenges. Many companies, especially small and medium-sized ones, are hesitant about getting started with big data technology innovation. Here are some of their concerns and basic solutions to them:

#1 Scalability issues

Managing and processing vast data volumes is difficult, leading to slow performance and increased costs.

Solution: Use distributed computing frameworks and cloud-based solutions for scalable resources and efficient processing.

#2 Data quality and integration challenges

Integrating diverse data sources can result in inconsistencies and unreliable insights.

Solution: Employ data cleaning tools, robust ELT processes, and strong data governance policies to maintain data integrity.

#3 Talent shortage

A shortage of skilled professionals hinders effective data analysis and utilization.

Solution: Invest in training programs, partner with educational institutions, and leverage AI tools to fill the talent gap.

#4 Ethical and regulatory considerations

Handling personal and sensitive data requires compliance with stringent regulations.

Solution: Implement data privacy frameworks, enforce ethical guidelines, and apply data anonymization techniques.
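As one small illustration, the Python sketch below replaces a direct identifier with a keyed hash before the record is used for analytics. Strictly speaking this is pseudonymization, a weaker measure than full anonymization, and the key, field names, and `pseudonymize` helper are assumptions made for the example.

```python
import hashlib
import hmac

# Hypothetical key: in practice it would come from a secrets manager.
SECRET_KEY = b"replace-with-a-securely-stored-key"


def pseudonymize(value: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed SHA-256 digest (HMAC)."""
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()


record = {"email": "jane.doe@example.com", "plan": "premium"}  # made-up record

# Keep the analytical fields, swap the identifier for its pseudonym.
safe_record = {**record, "email": pseudonymize(record["email"], SECRET_KEY)}
print(safe_record)
```

The same input always maps to the same pseudonym, so records can still be joined and counted without exposing the original email address.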

#5 Data security

Increased data volume and access points heighten the risk of breaches and cyber attacks.

Solution: Implement advanced security measures, conduct regular audits, and establish strict access control policies.

#6 Real-time processing

Real-time data processing is challenging with large datasets, leading to delays.

Solution: Utilize stream processing frameworks and in-memory computing for faster, real-time data analysis.

#7 Data storage capacity

Efficient and cost-effective storage of vast data volumes, especially unstructured data, is challenging.

Solution: Use NoSQL databases, data compression techniques, and hybrid storage solutions to optimize storage.

Explore big data challenges, practices, and mistakes with Bernard Marr, an author, popular keynote speaker, futurist, and strategic business and technology advisor, in the video below:

Big data use cases by industry

Here are examples of applications of big data tailored to the specific needs of businesses in particular industries:

#1 Healthcare

In healthcare, big data-based solutions are used to drive significant improvements in patient outcomes, operational efficiencies, and cost reductions.

Examples:

- Predictive analytics for patient admissions and disease outbreaks.

- Personalized medicine to tailor treatments to individual patients based on genetic and other data.

- Predictive maintenance to anticipate equipment failures and schedule maintenance.

#2 Finance

As for finance, big data-based solutions are used to enhance risk management, customer insights, and operational efficiency.

Examples:

- Fraud detection to identify and mitigate fraudulent transactions.

- Risk management solutions to assess and manage financial risks.

- Algorithmic trading to utilize big data for high-frequency trading strategies.

#3 Manufacturing

When it comes to manufacturing, big data-based solutions drive improvements in production processes, quality control, and supply chain management.

Examples:

- Predictive maintenance in the manufacturing industry to anticipate equipment failures and schedule maintenance.

- Quality control solutions to monitor production processes and maintain standards.

- Supply chain optimization to improve logistics and inventory management.

#4 Retail

Here, big data-based solutions enhance customer experience, inventory management, and sales strategies.

Examples:

- Customer personalization to offer tailored product recommendations.

- Inventory management to optimize stock levels to meet demand.

- Sales forecasting to predict future sales trends and customer demand.

- Market basket analysis to understand purchasing patterns.
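To give a feel for market basket analysis from the list above, here is a minimal Python sketch that computes two common measures, support and confidence, over a handful of invented baskets. The product names and helper functions are illustrative assumptions; real retail datasets would call for dedicated algorithms such as Apriori or FP-Growth.

```python
from collections import Counter
from itertools import combinations
from typing import Dict, FrozenSet, List, Set


def pair_support(baskets: List[Set[str]]) -> Dict[FrozenSet[str], float]:
    """Share of baskets that contain each pair of items."""
    counts: Counter = Counter()
    for basket in baskets:
        for pair in combinations(sorted(basket), 2):
            counts[frozenset(pair)] += 1
    return {pair: count / len(baskets) for pair, count in counts.items()}


def confidence(baskets: List[Set[str]], antecedent: str, consequent: str) -> float:
    """Of the baskets with `antecedent`, the share that also hold `consequent`."""
    with_antecedent = [basket for basket in baskets if antecedent in basket]
    if not with_antecedent:
        return 0.0
    both = sum(1 for basket in with_antecedent if consequent in basket)
    return both / len(with_antecedent)


baskets = [  # made-up purchases
    {"bread", "butter"},
    {"bread", "butter", "jam"},
    {"bread", "milk"},
    {"milk", "jam"},
]

support = pair_support(baskets)
print(support[frozenset({"bread", "butter"})])
print(confidence(baskets, "butter", "bread"))
```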

#5 Renewable energy

Finally, in renewable energy, big data-based solutions are used to optimize energy production, distribution, and consumption.

Examples:

- Energy forecasting to predict energy demand and supply for better grid management.

- Consumer energy management to provide insights and recommendations for reducing energy consumption.

- Predictive maintenance to schedule maintenance for wind turbines and solar panels.

- Grid management solutions to balance supply and demand in real-time.

Looking for a trusted tech partner to help implement big data in your business infrastructure and processes? Reach out to Intelliarts, and let’s discuss your needs.

Success stories of big data implementation

Here at Intelliarts, we have substantial experience with developing and implementing software solutions operating on big data. Here are two of our many related success stories:

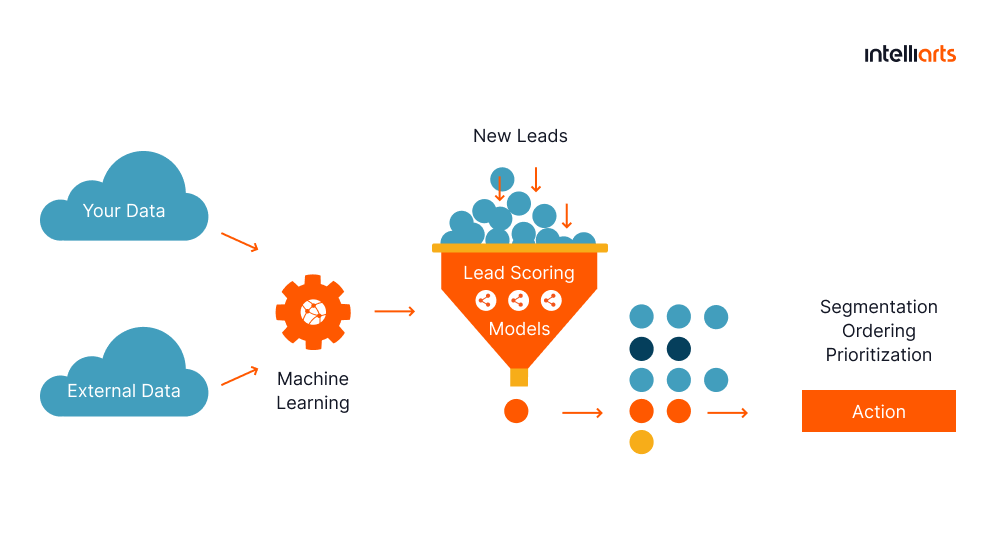

ML solution for searching and scoring leads in marketing

Challenge:

A midsize US-based insurance company (under NDA) specializing in home and auto insurance faced challenges with its traditional lead-scoring approach. With multiple lead sources and a complex sales funnel, the company struggled with labor-intensive processes and inefficient resource allocation, often wasting time on low-conversion leads.

Solution:

Intelliarts created a predictive lead-scoring model using machine learning to rank leads by conversion probability, allowing agents to focus on high probability leads. The solution was further refined with prospect prediction and integrated into the company’s systems using Amazon SageMaker.
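The general idea of ranking leads by conversion probability can be sketched in a few lines of Python. This is not the model built for the client: the features, weights, and bias below are invented for illustration, whereas a real model would learn its parameters from historical lead data.

```python
import math
from typing import Dict, List

# Hypothetical parameters; a trained model would learn these from data.
WEIGHTS = {
    "visited_pricing_page": 1.2,
    "requested_quote": 2.0,
    "prior_policy_holder": 0.8,
}
BIAS = -2.5


def conversion_probability(lead: Dict) -> float:
    """Logistic score: maps a weighted sum of features to a value in (0, 1)."""
    z = BIAS + sum(weight * lead.get(name, 0) for name, weight in WEIGHTS.items())
    return 1 / (1 + math.exp(-z))


leads: List[Dict] = [  # made-up leads
    {"id": "A", "visited_pricing_page": 1, "requested_quote": 1, "prior_policy_holder": 0},
    {"id": "B", "visited_pricing_page": 0, "requested_quote": 0, "prior_policy_holder": 0},
    {"id": "C", "visited_pricing_page": 1, "requested_quote": 0, "prior_policy_holder": 1},
]

# Highest-probability leads first, so agents can start at the top of the list.
ranked = sorted(leads, key=conversion_probability, reverse=True)
print([lead["id"] for lead in ranked])
```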

Outcomes:

The ML-powered lead scoring model increased the insurer’s profitability by 1.5% in a few months, cutting out 6% of inefficient leads and raising overall lead quality. The company now benefits from a data-driven approach, removing guesswork and optimizing resource use, with high-probability leads showing a 3.5 higher conversion rate than average. This transformation showcases how leveraging big data can directly impact business efficiency and profitability.

Explore the ML solution for searching and scoring leads case study in full.

All-purpose big data platform for data marketplace

Challenge:

DDMR, a US-based market research company specializing in clickstream data, needed a robust solution to handle the growing complexity and volume of their data. The challenge was to develop an end-to-end big data pipeline that could efficiently manage, process, and transform massive datasets into actionable insights.

Solution:

As part of our data engineering consulting services, Intelliarts developed an end-to-end big data pipeline for data collection, storage, processing, and delivery using Databricks, AWS, Spark, and Snowflake. We redesigned the infrastructure for scalability and reliability, optimized storage costs with a hot and cold data approach, automated DevOps processes, and enhanced data security to comply with GDPR.
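A hot and cold data approach generally means keeping recently used data on fast storage and moving rarely used data to cheaper storage. The Python sketch below shows one simple way such a rule could look. It is not the implementation used in the project: the 30-day window, dataset names, and `storage_tier` helper are assumptions for illustration only.

```python
from datetime import date, timedelta

# Hypothetical policy: data touched within the last 30 days stays "hot".
HOT_WINDOW_DAYS = 30


def storage_tier(last_accessed: date, today: date,
                 hot_window_days: int = HOT_WINDOW_DAYS) -> str:
    """Classify a dataset by how recently it was accessed."""
    age = today - last_accessed
    return "hot" if age <= timedelta(days=hot_window_days) else "cold"


today = date(2025, 1, 31)
datasets = {  # made-up dataset names and last-access dates
    "clicks_2025_01": date(2025, 1, 30),
    "clicks_2024_06": date(2024, 6, 15),
}

tiers = {name: storage_tier(accessed, today) for name, accessed in datasets.items()}
print(tiers)
```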

Outcomes:

The new pipeline enabled DDMR to process large volumes of data daily and translate them into actionable insights. With our solution, the company multiplied its revenue several times and experienced an efficiency boost. Besides, DDMR expanded its customer base and improved data accessibility, leveraging newly acquired big data capabilities.

Explore the big data platform case study in full.

Future trends and predictions for big data

Beyond the listed trends, it’s possible to predict several other changes brought about by the rise of big data utilization:

- Expansion of data literacy: As big data continues to grow in importance, there will be an increased focus on data literacy across organizations. Employees across all levels will need to understand how to interpret and utilize data insights to make informed decisions.

- Data monetization models: Organizations already view data as an asset that can be monetized. New business models may emerge around data marketplaces, where data owners can trade datasets.

- Unified data platforms: Potentially, we should expect the industry to head towards developing unified data platforms that integrate various data sources, structured, unstructured, and semi-structured, into a single environment. These could be both in-house business solutions and B2B products.

As for the union of big data with other technologies, the following scenarios are possible:

- Faster 5G networks will boost data transmission and connect more IoT devices, creating massive real-time data for advanced analytics.

- Blockchain will become a standard and ensure secure, transparent, and trusted data sharing across entities.

- NLP advancements will enhance machine understanding and analysis of human language in text-heavy data.

- New synthetic data generation approaches will help to create high-quality, privacy-safe datasets for training machine learning models without using real data.

- Digital twins will be used extensively to simulate, predict, and optimize processes using virtual replicas of physical entities with big data analytics.

You may be interested in exploring how to use digital twins in manufacturing in another blog post by Intelliarts.

Final take

Big data industry trends for 2025 and beyond promise enhanced data-driven decision-making, optimized operations, and better customer experiences. They include:

- Edge computing integration

- Artificial intelligence, machine learning, and big data synergy

- Advanced analytics and predictive modeling

- Real-time data processing and analytics

- Blockchain applications in big data

- Privacy and security considerations

- Hybrid and multi-cloud adoption

- Increased usage of data lakes

- Data governance and compliance

- Quantum computing impact on big data

- Sustainable and ethical big data practices

It’s essential to understand that keeping up with every big data trend while resolving related challenges is a complex task. It requires substantial AI and ML expertise, which businesses can acquire from external vendors.

Entrust the implementation of your big data project to Intelliarts. With more than 24 years in the market, serving as a technology consulting firm and delivering big data, software development, high tech, and other solutions, we are ready, willing, and able to help you get the most out of your data.

FAQ

1. What challenges does the integration of quantum computing bring to big data?

Quantum computing introduces challenges in data encryption and security, requiring new technologies to protect against quantum attacks. It also demands scalable quantum algorithms for processing and storing massive datasets, which will shape future big data analytics trends.

2. How do companies address data privacy in the era of big data?

To address privacy concerns, companies use big data technologies such as differential privacy, homomorphic encryption, and decentralized data models. These techniques help ensure compliance with regulations and protect user data.
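As a small taste of differential privacy, the Python sketch below adds Laplace noise to a count before releasing it, which is the classic Laplace mechanism. The epsilon value, the true count, and the helper names are assumptions for the example; production systems should rely on vetted privacy libraries rather than hand-rolled noise.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_count(true_count: int, epsilon: float, rng: random.Random) -> float:
    """Release a count with noise calibrated to the privacy budget epsilon."""
    sensitivity = 1.0  # adding or removing one person changes a count by at most 1
    return true_count + laplace_noise(sensitivity / epsilon, rng)


rng = random.Random(42)  # seeded only to make the example repeatable
noisy = private_count(1000, epsilon=0.5, rng=rng)
print(noisy)
```

A smaller epsilon means more noise and stronger privacy, while a larger epsilon keeps the released number closer to the true count.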

3. In what ways is big data contributing to sustainability efforts?

Big data promotes sustainability by optimizing resource management, improving supply chain transparency, and enhancing predictive analytics for renewable energy usage, supporting environmental goals and sustainable practices.