Solution Highlights

- Built scalable AWS infrastructure that handles 8 terabytes of data from 4 different providers

- Successfully migrated to a new data provider to improve data accuracy

- Retrained 150+ ML models using new, more accurate data sources

- Aggregated 150+ reports into one, improving the efficiency of model performance evaluation

About the Project

Customer:

Our customer (under NDA) is an innovative AI-driven real estate company that has grown from a small startup into a major player in the industry. By leveraging analytics, data filtering, and predictive modeling, the company forecasts market trends and generates over $7 million quarterly from its machine learning (ML) services. Its success rests on substantial investment in data: the company spends $700,000 a year on data providers.

Our partnership with this customer began in June 2022, when we successfully implemented three machine learning models tailored to their needs:

- Realtor model to predict the likelihood of a property being sold within the next few months

- Discount model to assess whether the predicted discount on a property is underestimated or overestimated

- Investor model to predict the relevance of a property to the investor market

Challenges & Project Goals:

After the three models were put into production, our partner reached out with a request for automation and further model enhancement. Specifically, they wanted to:

- Optimize infrastructure: Streamline the management of a vast array of project data, files, codebases, and models to ensure operational efficiency

- Enhance data accuracy: Move to new data providers to improve the accuracy of the data used in their predictive models

- Improve model visibility: With over 150 models in production, each generating its own report, manually evaluating these reports every month had become unmanageable. The customer asked for a solution to consolidate and better visualize model predictions for quicker, more effective decision-making.

This project aimed to address these business challenges and ensure scalability as our partner’s business continued to grow.

Solution:

To address the customer’s challenges, Intelliarts implemented a comprehensive solution focused on automation, AWS infrastructure optimization, and model improvement:

- We designed and built the entire AWS infrastructure behind the customer’s real estate ML platform to streamline the machine learning and data processing workflow. This covered everything from integrating third-party data sources to storing, processing, and preparing data for model training, as well as training the ML models and submitting predictions to customer portals. As a result, the customer could manage their vast data resources efficiently, and operations ran more smoothly across the entire pipeline.

- To support the transition to more accurate data providers, we executed a complex database migration in line with industry best practices. Following the migration, we retrained all 150+ ML models on the new data to increase predictive accuracy, improving our partner’s ability to generate reliable forecasts.

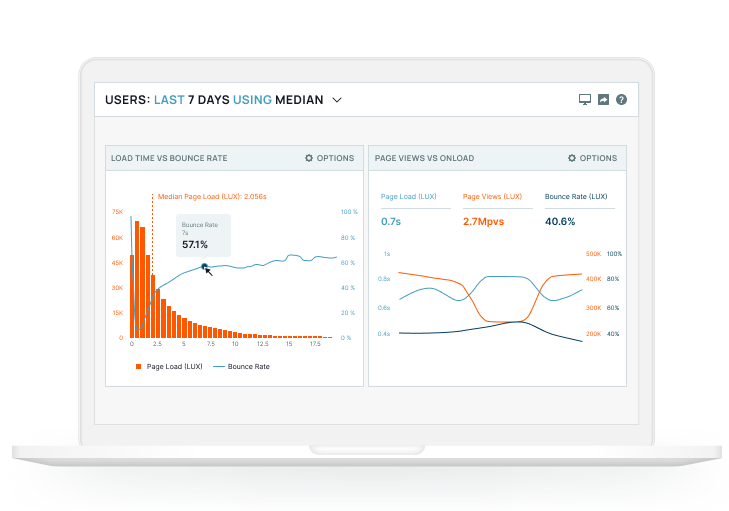

- To improve model visibility, our data scientists consolidated all model reports into a single dashboard and analyzed key performance metrics such as AUC and recall. The best-performing models were prioritized for marketing initiatives, while underperforming models were flagged for retraining and optimization. This approach enhanced overall visibility into model performance and allowed for targeted improvements.

Technology Solution

End-to-end data and model infrastructure

After implementing the realtor, investor, and discount models, we optimized the entire infrastructure to support large-scale automation and scalability. This involved improving data ingestion from third-party providers and building pipelines for processing, storing, and analyzing this data. Our data engineering team was also responsible for optimizing the infrastructure used to train the ML models, feed them new data for generating predictions, and submit the results to the customer’s portal using OpenSearch.

Our team used a suite of Amazon SageMaker services, together with other AWS services, to build a highly efficient, flexible infrastructure. Here’s a breakdown of the role each service played in our solution:

- SageMaker Model Registry served us well for managing model versions. It allowed us to track which models had been trained and evaluated and which were ready for deployment. This made it easy for our ML engineers to distinguish production-ready models from those that required further tuning or were obsolete. The clear versioning helped us reduce deployment errors and sped up the production cycle.

- We used SageMaker Studio to accelerate model analysis and deployment. It helped us move models into production easily and interact directly with other AWS services.

- SageMaker Processing was our primary tool for data engineering tasks such as data preprocessing and model evaluation. It allowed our data engineers to run data processing workloads at scale, with support for distributed processing via Apache Spark. The built-in parallel processing capabilities let us complete large-scale data transformations faster, which in turn shortened model training cycles.

- We used SageMaker Model Training to develop, fine-tune, and optimize ML models so that they perform accurately and reliably. In addition, the Hyperparameter Optimization (HPO) feature enabled us to automatically search for the best combination of hyperparameters, which was essential for improving the performance of complex models.

- The SageMaker Model class let our team store vital information about each model, including its location, deployment instructions, and instance requirements. Having all the necessary information in one place streamlined deployment and simplified the model delivery process.

- SageMaker batch transform jobs, run through the SageMaker Python SDK’s Transformer class, were crucial for scaling up model inference. By running copies of the same model across multiple instances, we processed vast amounts of data simultaneously. For this project, we ran batch predictions both on demand, per request, and on a monthly schedule. The ability to scale inference improved prediction times dramatically: the entire pipeline completes in just 2 hours.

- Processing Jobs were key to running data pre- and post-processing as well as model evaluation tasks on fully managed infrastructure. By choosing appropriate instance sizes and using custom Docker images, we optimized the execution of these jobs and significantly reduced costs for the customer.

- MWAA (Amazon Managed Workflows for Apache Airflow) was critical for orchestrating our workflows across nearly 20 pipelines spanning data engineering, backend, and machine learning. Its automation capabilities helped us streamline end-to-end operations, ensuring that data flowed smoothly from ingestion to model training and deployment.
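To make the versioning workflow in the Model Registry bullet above concrete, here is a minimal in-memory sketch. It is not the SageMaker API: the `ModelGroup` class, its methods, and the bucket path are hypothetical, and only the three status strings mirror the `ModelApprovalStatus` values SageMaker Model Registry uses. The idea is that every trained model is registered as a new version, reviewed, and only the newest approved version is picked for deployment.

```python
from dataclasses import dataclass, field
from typing import List, Optional

# Status strings mirroring SageMaker Model Registry's ModelApprovalStatus values.
APPROVED = "Approved"
PENDING = "PendingManualApproval"
REJECTED = "Rejected"
VALID_STATUSES = {APPROVED, PENDING, REJECTED}


@dataclass
class ModelVersion:
    version: int
    artifact_uri: str  # hypothetical location of the trained model artifact
    status: str = PENDING


@dataclass
class ModelGroup:
    """Hypothetical stand-in for one model package group (one business model)."""

    name: str
    versions: List[ModelVersion] = field(default_factory=list)

    def register(self, artifact_uri: str) -> ModelVersion:
        # New versions start out pending review and are numbered 1, 2, 3, ...
        mv = ModelVersion(version=len(self.versions) + 1, artifact_uri=artifact_uri)
        self.versions.append(mv)
        return mv

    def set_status(self, version: int, status: str) -> None:
        if status not in VALID_STATUSES:
            raise ValueError(f"unknown status: {status!r}")
        self.versions[version - 1].status = status

    def latest_approved(self) -> Optional[ModelVersion]:
        # Only explicitly approved versions are eligible for deployment.
        approved = [v for v in self.versions if v.status == APPROVED]
        return max(approved, key=lambda v: v.version, default=None)


group = ModelGroup("realtor-model")
for i in range(1, 4):
    group.register(f"s3://example-bucket/realtor/v{i}/model.tar.gz")
group.set_status(1, APPROVED)
group.set_status(2, APPROVED)
group.set_status(3, REJECTED)  # e.g. the version failed evaluation
print(group.latest_approved().version)  # 2
```

Keeping rejected and pending versions in the history, rather than deleting them, is what lets engineers see at a glance which versions are obsolete and which still need tuning.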
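The HPO feature mentioned in the Model Training bullet above can be illustrated with a simple random search. This is a swapped-in technique chosen for clarity: SageMaker automatic model tuning uses Bayesian optimization by default and also offers random, grid, and Hyperband strategies. The `objective` function is a hypothetical stand-in for launching a training job and reading its validation metric, and the search space is illustrative only.

```python
import random


def objective(params):
    # Hypothetical stand-in for a training job's validation score;
    # it peaks at learning_rate=0.1, max_depth=6.
    return (1.0
            - (params["learning_rate"] - 0.1) ** 2
            - 0.01 * (params["max_depth"] - 6) ** 2)


# Illustrative search space: integer bounds are sampled as integers, floats as floats.
SEARCH_SPACE = {
    "learning_rate": (0.01, 0.3),
    "max_depth": (3, 10),
}


def sample(space, rng):
    params = {}
    for name, (lo, hi) in space.items():
        if isinstance(lo, int) and isinstance(hi, int):
            params[name] = rng.randint(lo, hi)  # inclusive integer range
        else:
            params[name] = rng.uniform(lo, hi)
    return params


def random_search(objective, space, n_trials=50, seed=0):
    """Evaluate n_trials random configurations and keep the best one."""
    rng = random.Random(seed)
    best_params, best_score = None, float("-inf")
    for _ in range(n_trials):
        params = sample(space, rng)
        score = objective(params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


best_params, best_score = random_search(objective, SEARCH_SPACE)
print(best_params, round(best_score, 4))
```

Each random trial is independent of the others, which is why a managed tuner can run many of them in parallel; Bayesian strategies instead use earlier results to choose the next configuration.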
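The batch transform bullet above relies on running the same model on several instances, each handling part of the input. When a batch transform job uses multiple instances, SageMaker distributes the input objects among them itself; the sketch below imitates the idea with a hypothetical round-robin split, which is not necessarily how SageMaker assigns files. The `model` callable and the file names are placeholders.

```python
from typing import Callable, Dict, List


def shard_inputs(keys: List[str], instance_count: int) -> List[List[str]]:
    """Split input keys across instances round-robin (hypothetical scheme)."""
    if instance_count < 1:
        raise ValueError("instance_count must be at least 1")
    shards: List[List[str]] = [[] for _ in range(instance_count)]
    for i, key in enumerate(sorted(keys)):
        shards[i % instance_count].append(key)
    return shards


def run_batch(model: Callable[[str], float], keys: List[str],
              instance_count: int) -> Dict[str, float]:
    """Apply the same model to every shard and merge the per-shard predictions."""
    predictions: Dict[str, float] = {}
    for shard in shard_inputs(keys, instance_count):
        # In a real job each shard is processed on its own instance in parallel;
        # here the shards run one after another for simplicity.
        predictions.update({key: model(key) for key in shard})
    return predictions


files = [f"properties/part-{i:03d}.csv" for i in range(10)]
print([len(s) for s in shard_inputs(files, 3)])  # [4, 3, 3]
# Placeholder "model" that scores each file by the length of its name.
preds = run_batch(lambda key: float(len(key)), files, instance_count=3)
print(len(preds))  # 10
```

Because every shard sees an identical copy of the model, adding instances shortens wall-clock time roughly in proportion without changing the predictions themselves.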
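MWAA runs standard Apache Airflow, where each pipeline is a DAG of tasks. To show how such dependencies determine execution order, the sketch below uses Python’s standard-library `graphlib` module (Python 3.9+) rather than Airflow’s own API. The task names are hypothetical and do not describe the customer’s actual pipelines. Tasks that land in the same wave do not depend on each other and could run in parallel.

```python
from graphlib import TopologicalSorter

# Each task maps to the set of tasks it depends on (hypothetical pipeline).
PIPELINE = {
    "ingest_provider_a": set(),
    "ingest_provider_b": set(),
    "preprocess": {"ingest_provider_a", "ingest_provider_b"},
    "train": {"preprocess"},
    "evaluate": {"train"},
    "batch_predict": {"evaluate"},
    "publish_to_portal": {"batch_predict"},
}


def execution_waves(graph):
    """Group tasks into waves: every task in a wave has all dependencies done."""
    ts = TopologicalSorter(graph)
    ts.prepare()  # also raises CycleError if the graph has a cycle
    waves = []
    while ts.is_active():
        ready = sorted(ts.get_ready())
        waves.append(ready)
        ts.done(*ready)  # mark the whole wave finished before asking for more
    return waves


for i, wave in enumerate(execution_waves(PIPELINE), start=1):
    print(i, wave)
```

Airflow’s scheduler applies the same rule: a task becomes eligible only once all of its upstream tasks have succeeded.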

This infrastructure enables real estate agencies, the customer’s end-users, to access the company’s subscription products and view AI-powered predictions directly through the portal. This setup ensures seamless data flow and rapid model inference, providing insights that drive actionable business outcomes.

Database migration

After nearly two years of collaboration on this project, our partner decided to change their data provider to improve data accuracy. This decision required a complex database migration that we had to execute with great care and efficiency, given the many associated risks, including data loss or corruption, system downtime, and potential performance degradation.

Beyond these risks, several other factors made the data source migration more challenging:

- For a period, our team had to support both the legacy and new database versions. We implemented robust mechanisms for data synchronization and validation and performed comprehensive testing to identify and resolve any discrepancies between the two databases. These measures were designed to make the transition seamless for end users.

- We also had to make sure that critical components of the new database, such as client and property identifiers, matched those of the old one. Any discrepancies here could have compromised data integrity and model performance.

- A significant challenge was the need to retrain all 150+ ML models from scratch on the newly sourced data. We also had to make sure the new data sources met the same stringent requirements as the previous ones: reliability, availability, and consistency. We worked diligently to satisfy all three requirements and fine-tuned the models to the new data to optimize their performance.
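The synchronization and identifier checks described above can be sketched as a reconciliation step that compares the legacy and new datasets record by record. This is a minimal illustration, assuming both sources can be loaded as dictionaries keyed by a shared property identifier; the field names and sample records are hypothetical. It reports identifiers missing from the new source, identifiers that exist only in the new source, and field-level mismatches for shared identifiers.

```python
from typing import Any, Dict, Iterable

Record = Dict[str, Any]


def reconcile(legacy: Dict[str, Record], new: Dict[str, Record],
              fields: Iterable[str]) -> Dict[str, Any]:
    """Compare two {property_id: record} mappings and report discrepancies."""
    fields = list(fields)
    legacy_ids, new_ids = set(legacy), set(new)
    report: Dict[str, Any] = {
        "missing_in_new": sorted(legacy_ids - new_ids),
        "only_in_new": sorted(new_ids - legacy_ids),
        "mismatched": {},
    }
    for pid in sorted(legacy_ids & new_ids):
        # Record (legacy value, new value) for every checked field that differs.
        diffs = {
            f: (legacy[pid].get(f), new[pid].get(f))
            for f in fields
            if legacy[pid].get(f) != new[pid].get(f)
        }
        if diffs:
            report["mismatched"][pid] = diffs
    return report


legacy = {
    "P1": {"zip": "10001", "sqft": 850},
    "P2": {"zip": "94105", "sqft": 1200},
    "P3": {"zip": "60601", "sqft": 990},
}
new = {
    "P1": {"zip": "10001", "sqft": 850},
    "P2": {"zip": "94105", "sqft": 1250},
    "P4": {"zip": "73301", "sqft": 1500},
}
print(reconcile(legacy, new, ["zip", "sqft"]))
```

Running a check like this on every sync while both databases are live, and requiring an empty report before switching over, is one way to catch identifier drift before it reaches model training.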

Despite these challenges, our data scientists mitigated the risks, complied with industry best practices, and achieved outstanding results in this data source migration.

Model visibility improvements

As the project scaled, one of the key challenges was maintaining visibility into over 150 ML models in production. Each model generated its own report every month, making it impractical for the customer to review such an overwhelming amount of data. Our goal was to streamline this process and deliver actionable insights more efficiently.

To address this, our data scientists aggregated all the model reports into one. This allowed us to focus on key performance metrics, such as:

- AUC (area under the ROC curve), used to compare performance across models

- Recall, which measures the model’s ability to correctly identify true positives, that is, the share of actual positives it detects
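For reference, both metrics can be computed from a model’s labels and scores. The sketch below computes recall at a fixed decision threshold and AUC as the probability that a randomly chosen positive is scored above a randomly chosen negative, with ties counted as half. The 0.5 threshold is an assumption, and the pairwise loop grows quadratically with the number of examples, so it illustrates the definition rather than what you would run on a full monthly report.

```python
from typing import Sequence


def recall(y_true: Sequence[int], scores: Sequence[float], threshold: float = 0.5) -> float:
    """Share of actual positives that the model flags at the given threshold."""
    tp = sum(1 for t, s in zip(y_true, scores) if t == 1 and s >= threshold)
    positives = sum(1 for t in y_true if t == 1)
    return tp / positives if positives else 0.0


def roc_auc(y_true: Sequence[int], scores: Sequence[float]) -> float:
    """Probability that a random positive outranks a random negative (ties count 0.5)."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative example")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


y_true = [0, 0, 1, 1]
scores = [0.1, 0.4, 0.35, 0.8]
print(roc_auc(y_true, scores))  # 0.75
print(recall(y_true, scores))   # 0.5
```

AUC is threshold-free, which makes it convenient for comparing many models side by side, while recall depends on the chosen cut-off and shows how many real opportunities a model would miss in practice.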

Based on these metrics, we ranked the models, selecting the 10 top-performing models for marketing and customer engagement purposes. At the same time, we identified the 10 lowest-performing models and prioritized them for retraining and optimization. This systematic approach not only improved the accuracy of predictions but also increased model visibility.
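The ranking step above can be sketched as a sort over the aggregated report. The sketch assumes each report row holds a model name, its AUC, and its recall, and it ranks by AUC with recall as a tie-breaker; both the row schema and that ordering rule are assumptions, since the exact weighting of the two metrics is not specified. The model names in the example are placeholders.

```python
from typing import Any, Dict, List, Tuple

Row = Dict[str, Any]


def rank_models(rows: List[Row], k: int = 10) -> Tuple[List[str], List[str]]:
    """Return (top k, bottom k) model names, ranked by AUC, then recall."""
    ranked = sorted(rows, key=lambda r: (r["auc"], r["recall"]), reverse=True)
    top = [r["model"] for r in ranked[:k]]
    # Bottom list is ordered worst first, so the most urgent retraining candidates lead.
    bottom = [r["model"] for r in reversed(ranked[max(len(ranked) - k, 0):])]
    return top, bottom


rows = [
    {"model": "a", "auc": 0.91, "recall": 0.70},
    {"model": "b", "auc": 0.65, "recall": 0.40},
    {"model": "c", "auc": 0.88, "recall": 0.80},
    {"model": "d", "auc": 0.72, "recall": 0.50},
    {"model": "e", "auc": 0.88, "recall": 0.60},
]
top, bottom = rank_models(rows, k=2)
print(top)     # ['a', 'c']
print(bottom)  # ['b', 'd']
```

With fewer than 2k models the two lists would overlap; with 150+ models and k=10, as in this project, they stay disjoint.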

Business Outcomes

For both our customer and the Intelliarts team, this project was a successful collaboration. Here are several key milestones:

- The number of our partner’s clients grew from 5 to over 200

- Revenue reached $5-7 million per quarter, depending on the time period

- Over 2,000 models were trained throughout the project, with 150+ models actively running in production

- At peak data usage, our team worked with 8 terabytes of data sourced from 4 different providers

- We developed 3 PoC (Proof of Concept) models, all of which were successfully implemented

- The infrastructure used around 15 AWS services, 7 of which were directly related to machine learning

This project’s success was driven by the infrastructure improvements and data migration described in this case study. With the newly optimized AWS infrastructure, our partner now benefits from a scalable, automated system capable of handling vast amounts of data. We also reduced the time needed for data processing and model deployment and cut operational costs.

The successful migration to a new data provider resulted in more accurate data feeding into the models, further boosting the quality and reliability of predictions. The migration was carried out without downtime or data loss, and over 150 models were retrained on the new data.

Lastly, the improvements in model visibility significantly streamlined the reporting process. By aggregating and ranking model performance based on key metrics such as AUC and recall, the customer can now focus on the most effective models for marketing purposes while also optimizing the underperforming models.