The growing importance of RAG solutions is reflected in figures from Databricks, a global data and AI analytics company, which reports that 60% of its LLMs use RAG. Given how widely AI is now adopted, this potential makes the technology worth serious consideration at the business level.

AI-driven solutions are currently very popular across businesses and industries. Retrieval Augmented Generation (RAG) is a technology that improves AI performance, whether in a common chatbot or an enterprise-level solution for data analytics.

In this post, you’ll discover the common implementation strategy for enterprise RAG systems, their architecture pattern, key concepts, and related considerations. You’ll also get an overview of 10 best practices for using and integrating RAG systems in enterprises.

Common architecture pattern of RAG systems for enterprise

RAG systems are AI models that combine information retrieval and text generation to provide more accurate and contextually relevant responses.

RAG systems are often employed in Large Language Model (LLM) solutions, such as those based on ChatGPT or Claude AI, where they retrieve the relevant information from large datasets or documents needed to handle complex queries. In tasks requiring up-to-date or domain-specific knowledge, such as customer support, research, and enterprise applications, RAG systems can significantly improve response accuracy.

The RAG technique is well-suited for Natural Language Processing (NLP) applications, as it combines retrieval-based methods with generation-based models. This lets it harness the strengths of both approaches.

Different embedding models can yield varying results. Some models might capture the overall style or intent of a question, while others may focus on individual words rather than the actual meaning. Experimenting with multiple embedding models is beneficial to identify which best aligns with your intended results.

— Volodymyr Mudryi, AI, ML, and data science expert at Intelliarts.

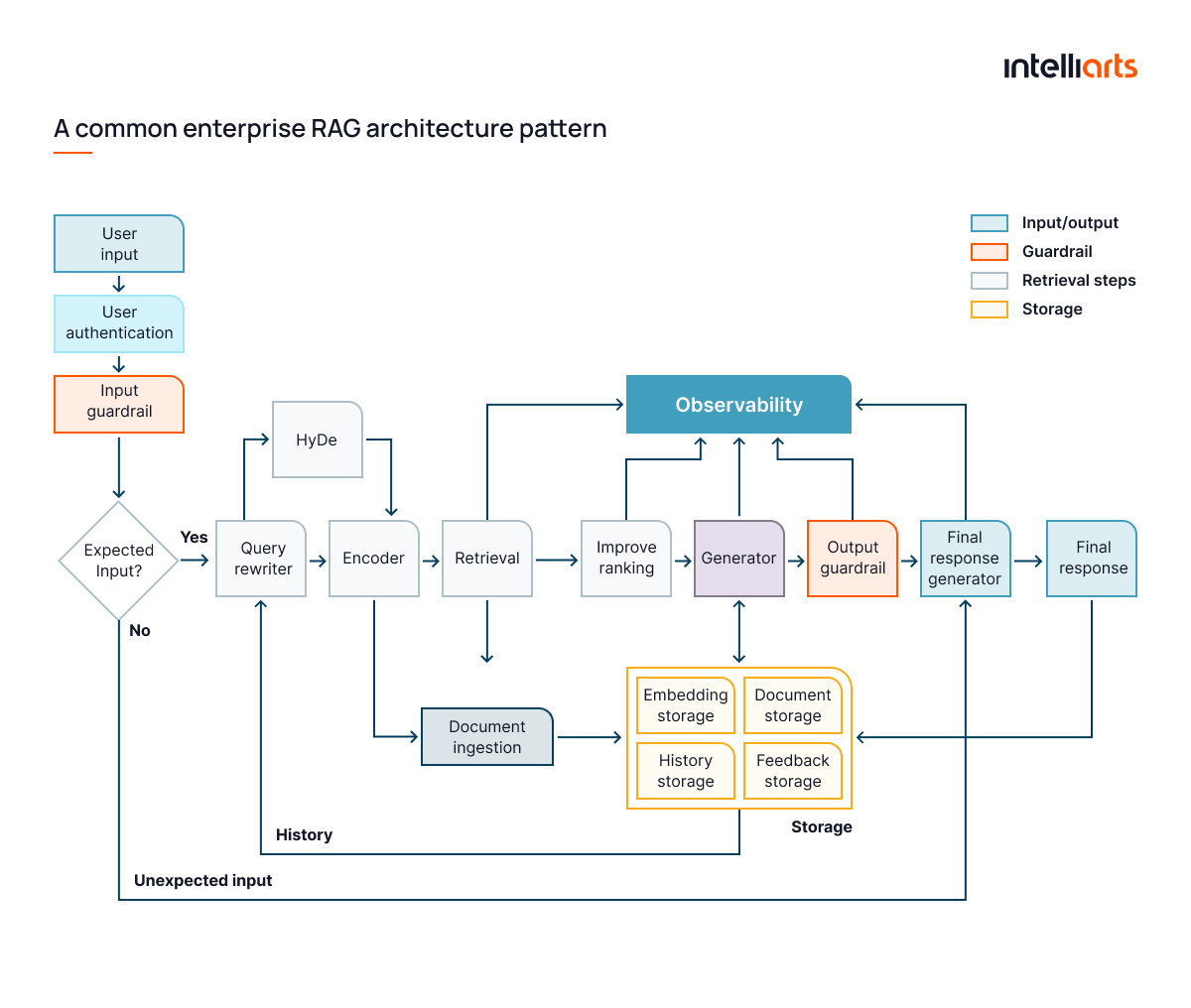

The infographic depicting a common enterprise architecture pattern for RAG is shown below:

The architecture of this advanced Retrieval Augmented Generation model shows how RAG handles user queries with enhanced security, retrieval, and response generation processes. Starting from user input, the query passes through authentication, input guardrails, and query rewriting. The system then uses components such as encoders, retrieval systems, and ranking to refine information drawn from stored data (embedding, document, history, and feedback storage).

This enterprise RAG architecture pattern can be broken down into these RAG workflow steps:

- User input and authentication

- Input validation with guardrails

- Query rewriting and encoding

- Information retrieval from storage

- Ranking and response generation

- Output validation with guardrails

- Final response delivery to the user
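
The workflow steps above can be sketched as a chain of small functions. This is a minimal illustration under stated assumptions, not the architecture from the infographic: every name here (`answer`, `input_guardrail`, `retrieve_and_rank`, and so on), the blocked-term list, and the keyword-overlap retriever are hypothetical stand-ins for real authentication, guardrail, encoder, vector search, and LLM components.

```python
from dataclasses import dataclass

# Hypothetical in-memory document storage; a real system would use a vector database.
DOCUMENTS = {
    "doc-1": "Refunds are processed within 14 days of the return request.",
    "doc-2": "Enterprise plans include priority support and a dedicated manager.",
}
AUTHORIZED_USERS = {"alice"}          # stand-in for real authentication
BLOCKED_TERMS = {"password", "ssn"}   # stand-in for real guardrail policies


@dataclass
class Answer:
    text: str
    sources: list


def passes_guardrail(text: str) -> bool:
    """Return False when the text contains a blocked term."""
    return not any(term in text.lower() for term in BLOCKED_TERMS)


def rewrite_query(query: str) -> str:
    """Normalize the query; a real rewriter might call an LLM."""
    return " ".join(query.lower().split())


def tokenize(text: str) -> set:
    return {word.strip(".,?!") for word in text.lower().split()}


def retrieve_and_rank(query: str, top_k: int = 1) -> list:
    """Score documents by keyword overlap (placeholder for encoder + vector search)."""
    query_tokens = tokenize(query)
    scored = [(len(query_tokens & tokenize(text)), doc_id)
              for doc_id, text in DOCUMENTS.items()]
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [doc_id for score, doc_id in scored[:top_k] if score > 0]


def generate(doc_ids: list) -> str:
    """Placeholder for the LLM call: return the retrieved context as the answer."""
    if not doc_ids:
        return "I could not find relevant information."
    return " ".join(DOCUMENTS[doc_id] for doc_id in doc_ids)


def answer(user: str, query: str) -> Answer:
    if user not in AUTHORIZED_USERS:                  # authentication
        raise PermissionError("user is not authenticated")
    if not passes_guardrail(query):                   # input guardrails
        return Answer("Your request was blocked by input guardrails.", [])
    rewritten = rewrite_query(query)                  # query rewriting
    doc_ids = retrieve_and_rank(rewritten)            # retrieval and ranking
    text = generate(doc_ids)                          # response generation
    if not passes_guardrail(text):                    # output guardrails
        return Answer("The response was withheld by output guardrails.", [])
    return Answer(text, doc_ids)                      # final delivery
```

Calling `answer("alice", "How long do refunds take?")` walks through every stage and returns the refund document together with its ID as the source.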

You can learn more about what RAG models are and how they are used in another blog post by Intelliarts.

The benefits of RAG systems for large-scale organizations, including their effect on enterprise SLAs (service-level agreements), are shown in the image below:

10 best practices for using Retrieval Augmented Generation (RAG)

Let’s take an in-depth look at the best practices for RAG system implementation, based on the practical experience of Intelliarts engineers:

#1 Provide context on how the output was generated

In enterprise RAG systems, providing context around generated outputs is essential for building user trust and transparency. When users can see the sources of information, they can verify data accuracy, which is especially valuable in sensitive areas like compliance or customer support. Intelliarts often integrates source attribution in RAG solutions, allowing users to track data origins in real time, which enhances credibility and confidence in the system.
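
As a rough illustration of source attribution, the sketch below appends a numbered source list to a generated answer. The `RetrievedChunk` fields and the `format_with_sources` helper are hypothetical names chosen for this example, and the output layout is only one possible convention.

```python
from dataclasses import dataclass


@dataclass
class RetrievedChunk:
    source: str   # hypothetical field: file name or URL of the origin document
    page: int
    text: str


def format_with_sources(answer: str, chunks: list) -> str:
    """Append a deduplicated, numbered source list to a generated answer."""
    references = []
    for chunk in chunks:
        reference = f"{chunk.source}, p. {chunk.page}"
        if reference not in references:   # keep first occurrence, preserve order
            references.append(reference)
    lines = [answer, "", "Sources:"]
    lines += [f"[{i}] {ref}" for i, ref in enumerate(references, start=1)]
    return "\n".join(lines)
```

Keeping the source metadata next to each chunk from ingestion onward is what makes this step cheap at answer time.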

#2 Feed the LLM product integration data

Integrating data directly from enterprise tools like CRM or ERP systems is crucial for RAG systems to provide contextually relevant and accurate responses. This integration allows the RAG system to deliver responses tailored to the business’s unique needs. Intelliarts regularly incorporates data from internal systems, ensuring real-time insights and improved response relevancy, which is especially beneficial in customer support and operations management.

#3 Continuously evaluate the outputs to spot issues and areas of improvement

To maintain optimal performance, RAG systems should undergo regular evaluations, focusing on consistency, load handling, and edge cases. This approach ensures that potential issues are quickly identified and addressed. At Intelliarts, we apply rigorous testing methods in all custom RAG implementations, allowing us to refine systems continuously and uphold performance standards, even under varying operational conditions.
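
One simple way to make such evaluations repeatable is to keep a small labeled set of queries and measure how often the expected document shows up in the top results. The sketch below assumes retrieval results are already collected as lists of document IDs; `hit_rate_at_k` is a hypothetical helper, and hit rate is only one of several metrics a team might track.

```python
def hit_rate_at_k(results: dict, expected: dict, k: int = 3) -> float:
    """Fraction of queries whose expected document appears in the top-k results.

    results:  query -> ranked list of retrieved document IDs
    expected: query -> ID of the document that should be retrieved
    """
    if not expected:
        raise ValueError("expected must contain at least one labeled query")
    hits = sum(
        1 for query, doc_id in expected.items()
        if doc_id in results.get(query, [])[:k]
    )
    return hits / len(expected)
```

Running the same labeled set after every change to chunking, embeddings, or ranking makes regressions visible early.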

#4 Utilize dynamic data loading/updating

Dynamic data loading ensures that RAG systems operate with the latest information, preventing outdated data from affecting response accuracy. This capability is crucial in fast-paced sectors where information constantly changes. Intelliarts integrates real-time data loading into RAG systems, allowing users to interact with the most up-to-date insights, which enhances response quality in areas like finance and customer support.
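
A minimal sketch of one way to keep an index fresh, assuming documents are available as plain text keyed by ID: compare content hashes against what was indexed last time and re-embed only what changed. The function names are hypothetical, and a production setup would more likely react to change events from the source system.

```python
import hashlib


def content_hash(text: str) -> str:
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def plan_index_update(indexed_hashes: dict, current_docs: dict) -> dict:
    """Decide which documents to (re)index and which to drop.

    indexed_hashes: doc_id -> hash stored at the previous indexing run
    current_docs:   doc_id -> current document text
    """
    upsert = sorted(
        doc_id for doc_id, text in current_docs.items()
        if indexed_hashes.get(doc_id) != content_hash(text)   # new or changed
    )
    delete = sorted(
        doc_id for doc_id in indexed_hashes
        if doc_id not in current_docs                          # removed at the source
    )
    return {"upsert": upsert, "delete": delete}
```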

#5 Maintain hierarchical document structure

Keeping a hierarchical structure in documents enhances RAG systems’ ability to retrieve relevant information accurately. Organizing content with clear headings and subheadings ensures that retrieval focuses on contextually appropriate sections. This structure not only boosts response accuracy but also enables more meaningful, layered responses, which is especially important for industries dealing with complex data.
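
To show what preserving hierarchy can look like in practice, the sketch below splits Markdown-style text into sections and tags each one with its full heading path. It assumes headings use the `#` prefix convention; `split_by_headings` is a hypothetical helper, and other formats such as PDF or HTML would need their own parser.

```python
import re

HEADING = re.compile(r"^(#{1,6})\s+(.*)$")


def split_by_headings(text: str) -> list:
    """Split Markdown-style text into sections tagged with their heading path."""
    path = []       # current heading stack, e.g. ["Policies", "Refunds"]
    sections = []
    buffer = []

    def flush():
        body = "\n".join(buffer).strip()
        if body:
            sections.append({"path": " > ".join(path), "text": body})
        buffer.clear()

    for line in text.splitlines():
        match = HEADING.match(line.strip())
        if match:
            flush()                       # close the section under the old path
            level = len(match.group(1))
            del path[level - 1:]          # drop headings at the same or deeper level
            path.append(match.group(2).strip())
        else:
            buffer.append(line)
    flush()
    return sections
```

Storing the heading path alongside each section lets the retriever and the LLM see where a passage sits in the original document.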

#6 Implement a thoughtful chunking strategy

Chunking documents into smaller parts improves retrieval efficiency but can create challenges if relevant information is split across chunks. To optimize chunking, follow document structures like sections or paragraphs, and experiment with parameters such as chunk size and overlap, or with an embedding-based strategy, to balance information granularity and processing efficiency and thus enhance RAG performance.
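
The effect of chunk size and overlap is easy to see in a small sketch. The version below chunks by word count, which is an assumption made for simplicity; real pipelines often count tokens and respect section or paragraph boundaries. The name `chunk_words` and the default values are hypothetical.

```python
def chunk_words(text: str, chunk_size: int = 200, overlap: int = 40) -> list:
    """Split text into word-based chunks where neighbors share `overlap` words."""
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("require chunk_size > 0 and 0 <= overlap < chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):   # last chunk reached the end
            break
    return chunks
```

A larger overlap lowers the chance that a relevant sentence is cut in half, at the cost of storing and embedding more text.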

#7 Optimize retrieval quality with relevance scoring

Relevance scoring prioritizes the most applicable data during retrieval, improving the precision of responses. By aligning retrieved data closely with user queries, RAG systems deliver more contextually accurate answers. Intelliarts employs relevance scoring in enterprise RAG solutions like retrieval-based chatbots, especially for applications requiring precise data interpretation, such as regulatory compliance and financial analysis.
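
Relevance scoring is often implemented as a similarity measure between the query embedding and each chunk embedding. The sketch below uses cosine similarity over plain Python lists and a hypothetical `min_score` cutoff; the vectors are assumed to come from whichever embedding model the system uses, and dedicated rerankers are a common alternative.

```python
import math


def cosine_similarity(a: list, b: list) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def rank_by_relevance(query_vec: list, chunk_vecs: dict,
                      top_k: int = 3, min_score: float = 0.0) -> list:
    """Return up to top_k (chunk_id, score) pairs with score >= min_score, best first."""
    scored = [(chunk_id, cosine_similarity(query_vec, vec))
              for chunk_id, vec in chunk_vecs.items()]
    scored = [pair for pair in scored if pair[1] >= min_score]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]
```

The cutoff matters as much as the ordering: passing weakly related chunks to the LLM tends to dilute the answer.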

#8 Establish feedback loops for continuous learning

RAG systems integrated with feedback loops adapt over time, learning from user interactions to enhance response quality and accuracy. This continuous learning approach is key to meeting evolving business needs. Intelliarts integrates user feedback into RAG systems, refining data retrieval accuracy based on real-world usage, which is particularly beneficial in applications with dynamic requirements like customer service.
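
One lightweight form of a feedback loop is to record whether users found an answer helpful and nudge the retrieval scores of the chunks behind it. The sketch below is an assumption about how this could be wired, not a description of any specific product: `FeedbackStore`, `rerank_with_feedback`, and the `weight` value are all hypothetical.

```python
from collections import defaultdict


class FeedbackStore:
    """Keeps helpful / not helpful counts per chunk (in memory, for illustration)."""

    def __init__(self):
        self._votes = defaultdict(lambda: [0, 0])   # chunk_id -> [up, down]

    def record(self, chunk_id: str, helpful: bool) -> None:
        self._votes[chunk_id][0 if helpful else 1] += 1

    def boost(self, chunk_id: str) -> float:
        """Value in (-1, 1); 0.0 when no feedback exists for the chunk."""
        up, down = self._votes.get(chunk_id, (0, 0))
        return (up - down) / (up + down + 1)


def rerank_with_feedback(scored: list, store: FeedbackStore, weight: float = 0.2) -> list:
    """Adjust (chunk_id, score) pairs by feedback and sort best first."""
    adjusted = [(chunk_id, score + weight * store.boost(chunk_id))
                for chunk_id, score in scored]
    return sorted(adjusted, key=lambda pair: pair[1], reverse=True)
```

Keeping the weight small means feedback can break ties and correct repeated misses without overriding the relevance score.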

#9 Use version control for data sources and models

Version control is critical in RAG systems to manage changes to data sources and model configurations, especially in industries requiring strict audit trails. Intelliarts incorporates version control into enterprise RAG solutions, allowing for consistent tracking and updates, which supports data integrity and compliance in sectors like finance and legal.
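
A simple way to make a RAG setup auditable is to record a manifest that fingerprints the model configuration together with the data sources. The sketch below is one possible approach under the assumption that sources are available as text and the configuration is JSON-serializable; `build_manifest` and its field names are hypothetical.

```python
import hashlib
import json


def build_manifest(model_config: dict, sources: dict) -> dict:
    """Fingerprint the model configuration and data sources of one deployment.

    model_config: JSON-serializable settings (model name, chunk size, and so on)
    sources:      source name -> source content as text
    """
    source_hashes = {
        name: hashlib.sha256(content.encode("utf-8")).hexdigest()
        for name, content in sources.items()
    }
    payload = {"model": model_config, "sources": source_hashes}
    # sort_keys makes the fingerprint independent of dictionary ordering
    canonical = json.dumps(payload, sort_keys=True)
    payload["version"] = hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:12]
    return payload
```

Logging the resulting `version` with every generated answer makes it possible to trace a response back to the exact data and configuration that produced it.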

#10 Use ensemble techniques to reduce bias

Combining outputs from multiple models through ensemble techniques helps reduce variance, making the system more robust. Intelliarts employs this approach in enterprise RAG systems, ensuring balanced insights that are especially valuable in decision-making contexts. This technique supports unbiased results, crucial for sectors like human resources and customer support, where fair responses are essential.
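
As one concrete example of an ensemble technique, the sketch below merges ranked results from several retrievers with reciprocal rank fusion. Treating the combined models as retrievers is an assumption made for this example, since the technique above applies to other model outputs as well; the function name is hypothetical and `k=60` is a commonly used default.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings: list, k: int = 60) -> list:
    """Merge several ranked lists of document IDs into one list, best first.

    Each document scores 1 / (k + rank) in every ranking where it appears,
    so documents ranked well by several models rise to the top.
    """
    scores = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # sort by fused score, breaking ties by ID for deterministic output
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))
```

Because only ranks are used, the models do not need comparable score scales, which keeps a single outlier model from dominating the result.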

RAG strategy for enterprises and an overview of main concepts and considerations

As mentioned previously, RAG is an extension of an AI-driven system. So, if you are wondering how to implement RAG in an enterprise, here is a step-by-step best practice for enterprise RAG system implementation:

- Select the AI model that’s suitable for an intended purpose

- Integrate RAG mechanism

- Define data sources and structure

- Establish query-response flow

- Implement feedback and monitoring mechanisms

- Ensure data security and compliance

- Scale and maintain the system

With the steps to implement RAG systems in enterprises covered, we can now review the key concepts and considerations related to this process. They are provided in the table below:

The step-by-step process of implementing RAG in your business and the overview of key concepts of enterprise RAG system integration should give you valuable insights into RAG and help you define your RAG development needs. (To ensure a RAG deployment is secure and compliant, enterprises can also leverage AI pentesting tools to automatically detect vulnerabilities, misconfigurations, and exposed data, providing an added layer of protection.)

Intelliarts experience with RAG

Here are several Intelliarts case studies of RAG development for businesses:

Analysis report generation with RAG-based system success story

The Intelliarts team is working on an ongoing project for a customer who handles massive amounts of data to provide actionable insights into China’s political economy. They have an extremely large data pool in PDF format. Currently, analysis is provided by domain experts, and a single request may take as long as an hour. So, there’s a crucial need for automation and process improvement. Here’s how this case unfolds:

Challenge: To create a RAG-enhanced AI solution able to execute all sorts of summarization and data retrieval procedures. A development difficulty in this case is the poor structuring and storage of the original data pool, along with large amounts of unsuitable data.

Solution: A custom RAG system that contains guardrails and a query refiner, retrieval functionality, and a vector database. It can generate responses to users’ queries, return sources, and tailor responses based on images contained in PDF files from the storage.

Result: Currently, the time it takes to respond to a user’s request has been shortened from hours to minutes. Still, since the project is ongoing, we look forward to refining the RAG system and bringing even more value.

Looking for a trusted tech partner to provide you with AI development services? Let Intelliarts address your business needs.

RAG-enhanced AI recommendation system development success story

The Intelliarts team partnered on a project to develop an AI-powered recommendation system, the “step suggester,” to assist students in cybersecurity coursework. This tool offers real-time guidance to students facing challenges, improving course completion rates.

Challenge: Create a RAG-enhanced AI system that analyzes student actions in a virtual environment, delivering tailored recommendations to reduce dropout rates. The main challenge was structuring and analyzing large volumes of unorganized data while incorporating cybersecurity insights.

Solution: Intelliarts built a PoC for an AI assistant combining machine learning, LLMs, and programmatic algorithms. The system identifies points where students get stuck and provides actionable steps, leveraging custom algorithms and LLM responses for each task.

Result: The AI agent reduced support time and improved course completion rates. The PoC confirmed the solution’s feasibility, setting the stage for full-scale deployment across 700 course tasks.

RAG-enhanced ChatGPT chatbot creation success story

The Intelliarts team developed an AI chatbot data extraction solution using ChatGPT to help an NGO quickly retrieve information on gun safety from their extensive knowledge base.

Challenge: The NGO’s growing data made it challenging for employees to find relevant information efficiently. They needed a solution to extract data quickly from their knowledge base.

Solution: Intelliarts created a ChatGPT-powered chatbot that performs data extraction and analysis on user queries, leveraging RAG methods. The chatbot was trained to focus on gun safety topics and provide references to support its responses. This system integrates both textual and tabular data handling, using advanced ML features for accurate and contextually relevant answers.

Results: The chatbot reduced information retrieval time from hours to minutes, saving significant operational time. It also aligned with the NGO’s tone, allowing employees to streamline their workflow, boost productivity, and make better decisions with the RAG system. The AI assistant is seamlessly integrated into the NGO’s system, providing a user-friendly, personalized solution.

Final Take

RAG systems offer enterprises advanced capabilities in data retrieval and context-aware response generation, making them invaluable across applications like customer service, content generation, and compliance. By following best practices and understanding which RAG implementation best fits their specific needs, businesses can unlock the full potential of the technology.

For enterprises looking to leverage RAG, partnering with experienced AI developers can streamline implementation and drive impactful results aligned with business goals. Here at Intelliarts, we have substantial in-house AI development expertise. Over the span of 24 years on the market, we have developed a number of fully-fledged AI solutions, including ones reinforced with RAG systems.

FAQ

1. How can a RAG system improve our data retrieval processes?

An enterprise RAG system enhances data retrieval by combining information retrieval with text generation, delivering more accurate, context-aware responses. This system efficiently processes large datasets, providing relevant results quickly, which can significantly boost decision-making and streamline information access across various enterprise applications.

2. What are the costs involved in implementing a RAG system?

Enterprise RAG system implementation costs depend on factors such as data complexity, integration with existing infrastructure, and customization needs. Costs typically cover RAG system software for enterprises, hardware, data storage, and maintenance. We provide a tailored assessment to determine precise costs for your specific use case.

3. How does your company ensure the robustness of RAG systems over time?

We ensure the robustness of RAG systems for enterprises by implementing continuous monitoring, regular updates, and retraining models to adapt to evolving data. Our custom LLM development service includes the best tools for enterprise RAG system implementation, performance tracking, and error handling, ensuring reliable long-term operation and adaptability to your business needs.