Business data is a primary source of insights. But how do companies translate data points recorded at regular time intervals into strategic actions, and how do they automate that analysis? Machine learning for time series is the answer.

The global machine learning market is projected to reach $113.10 billion by 2025 and further grow to $503.40 billion by 2030. With 42% of enterprise-scale companies reporting the use of AI in their business operations, the application of fairly popular analytical methods like time series analysis is becoming more common.

In this post, you’ll explore traditional statistical methods and find out where machine learning for time series outperforms them. You’ll also learn the most commonly used machine learning approaches and how to choose the right one. Finally, you’ll review several use cases of time series machine learning and examine best practices for implementing it.

Traditional statistical methods in business and why they are not enough

Businesses have always relied heavily on data to obtain useful insights and spot lucrative opportunities. Uncovering patterns in data is exactly what business statistics is for. So, before delving into machine-aided statistics, let’s recall the traditional approaches.

All models are wrong, but some are useful. — George E. P. Box, a British Statistician

Traditional statistical approaches and their usages

The main statistical approaches used to analyze data such as sales trends, demand patterns, and seasonality include:

#1 ARIMA (AutoRegressive Integrated Moving Average)

- Use: Effective for univariate time series with trends or seasonality after differencing.

- Strengths: Good interpretability, handles trend components well.

- Limitations: Assumes linearity, is sensitive to outliers, and performs poorly on multivariate data or on series that differencing cannot make stationary.
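
The differencing step mentioned for ARIMA can be illustrated with a minimal sketch. The helper name `difference` and the sample values are assumptions made for illustration and do not come from any particular library.

```python
def difference(series, lag=1):
    """Return the lag-differenced series: y[t] - y[t - lag]."""
    return [series[t] - series[t - lag] for t in range(lag, len(series))]


# A made-up series with a steady upward trend.
trend = [10, 12, 14, 16, 18]
print(difference(trend))  # [2, 2, 2, 2]
```

After first-order differencing, the trend disappears and only the constant step remains, which is the kind of stationary input the autoregressive and moving-average parts expect.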

#2 Naive Method

- Use: Baseline model assuming the next value equals the last observed one.

- Strengths: Extremely simple, useful for benchmarking.

- Limitations: Poor accuracy in complex or volatile datasets.

#3 Holt-Winters (Triple Exponential Smoothing)

- Use: Models level, trend, and seasonality for short-term forecasts.

- Strengths: Easy to implement, good for seasonal data.

- Limitations: Limited to univariate time series, sensitive to anomalies.

#4 Exponential Smoothing

- Use: Weights recent observations more heavily for short-term forecasting.

- Strengths: Fast, interpretable, and effective for stable series.

- Limitations: Ineffective for complex seasonality or long-term forecasting.
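
To make the Naive Method and Exponential Smoothing more concrete, here is a minimal sketch of both. The function names, the smoothing factor `alpha=0.5`, and the sales figures are illustrative assumptions. Simple exponential smoothing is shown without trend or seasonal terms.

```python
def naive_forecast(series):
    """Naive method: the next value equals the last observed one."""
    return series[-1]


def simple_exp_smoothing(series, alpha=0.5):
    """Return the smoothed level after the last observation.

    level_t = alpha * y_t + (1 - alpha) * level_{t-1}, initialised with y_0.
    The final level serves as the one-step-ahead forecast.
    """
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in (0, 1]")
    level = series[0]
    for y in series[1:]:
        level = alpha * y + (1 - alpha) * level
    return level


sales = [100, 110, 105, 120]  # made-up numbers
print(naive_forecast(sales))             # 120
print(simple_exp_smoothing(sales, 0.5))  # 112.5
```

A larger `alpha` makes the forecast react faster to recent observations, while a smaller one smooths out more noise.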

#5 Linear Regression

- Use: Predicts future values based on time and external regressors.

- Strengths: Clear relationships, easy diagnostics.

- Limitations: Assumes linear trends, a poor fit for complex temporal patterns.

Explore what time series analysis is and real-life case studies of its implementation in another blog post by Intelliarts.

Reasons to opt for ML approaches for time series

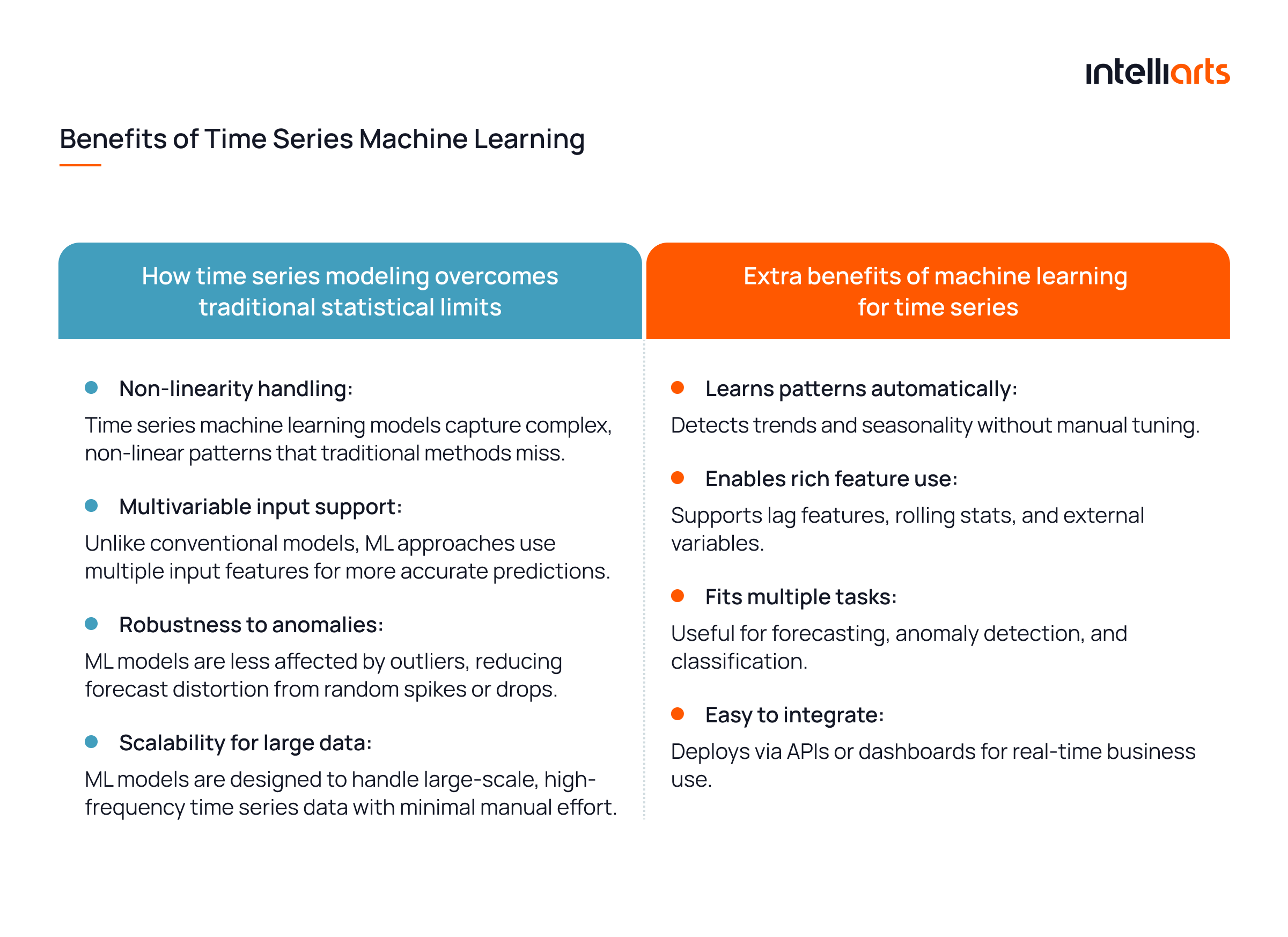

The limitations detailed above point to several weaknesses shared by traditional statistical methods:

- Linearity assumption by default: Traditional models struggle to capture non-linear patterns.

- Univariate focus: Conventional models are not designed to handle multiple input variables natively.

- High outlier sensitivity: Even small anomalies and noise in the data can skew predictions and lead to major inaccuracies.

- Low scalability potential: Traditional approaches are hard to scale to large or high-frequency datasets, especially when each model is tuned by a human analyst.

Note. Beyond these weaknesses, there is also the human factor. In traditional approaches, analysts still select the data sequences and statistical models themselves. When the data is vast and complicated, and different models produce contradictory outcomes, even experienced specialists struggle to interpret results correctly.

The infographic below shows how machine learning models for time series address those limitations:

Core machine learning approaches for time series

Several families of machine learning models are suitable for time series analysis. Let’s go through the most common ones:

#1 Supervised Learning models

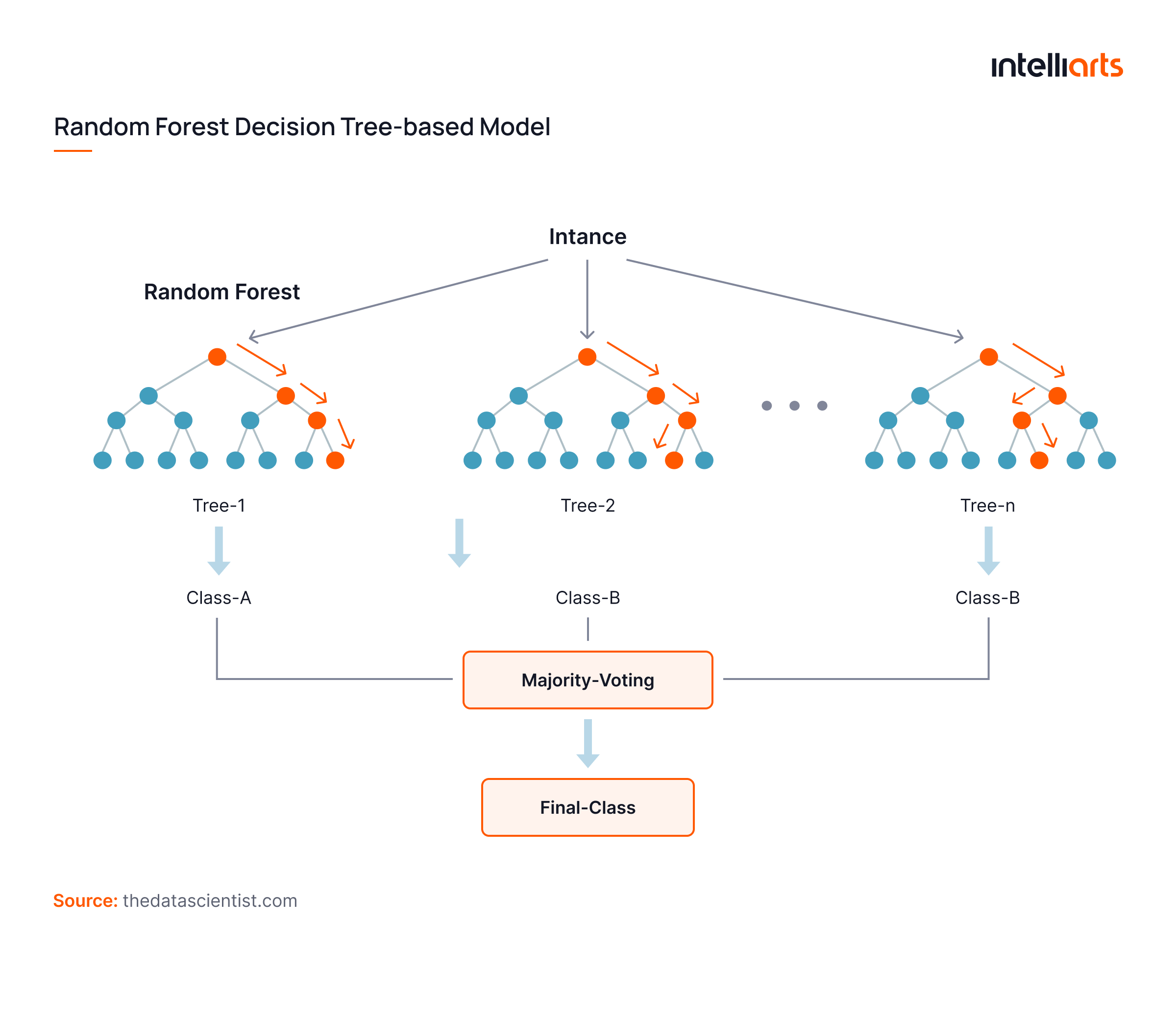

These models use labeled historical data and engineered features (like lags and rolling means) to predict future values. Popular approaches include decision tree-based models, linear regression, and support vector regression.

Example: Decision Tree-based Models (Random Forest, XGBoost)

How it works: Converts time series into a tabular format and fits a model.

Strengths:

- Handles non-linearity and outliers

- Works with multivariate features

- Interpretable (especially trees)

Weaknesses:

- Requires manual feature engineering

- Not sequential by design

- Limited long-term memory

Best for: Sales forecasting, demand planning, financial time series
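
The "tabular format" step described above can be sketched as follows. The helper `make_lag_table` and the demand values are hypothetical, and the choice of three lags is an assumption made for illustration.

```python
def make_lag_table(series, n_lags=3):
    """Turn a 1-D series into (features, target) rows.

    Each row holds the previous `n_lags` values (oldest first) as features
    and the current value as the target.
    """
    rows, targets = [], []
    for t in range(n_lags, len(series)):
        rows.append(series[t - n_lags:t])
        targets.append(series[t])
    return rows, targets


demand = [5, 7, 6, 8, 9, 11]  # made-up numbers
X, y = make_lag_table(demand, n_lags=3)
for features, target in zip(X, y):
    print(features, "->", target)
```

The resulting `X` and `y` have the shape that tabular regressors expect, so they could be passed to a tree-based model such as scikit-learn's `RandomForestRegressor` via `fit(X, y)`.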

Explore the machine learning in demand forecasting success story by Intelliarts for an extra insight into our experience and expertise. It reveals how exactly our software development team handles such complex projects from businesses worldwide.

#2 Deep Learning approaches

These models learn directly from sequences without manual feature engineering, capturing complex patterns over time. This includes RNN-based models (LSTM, GRU) and convolutional networks.

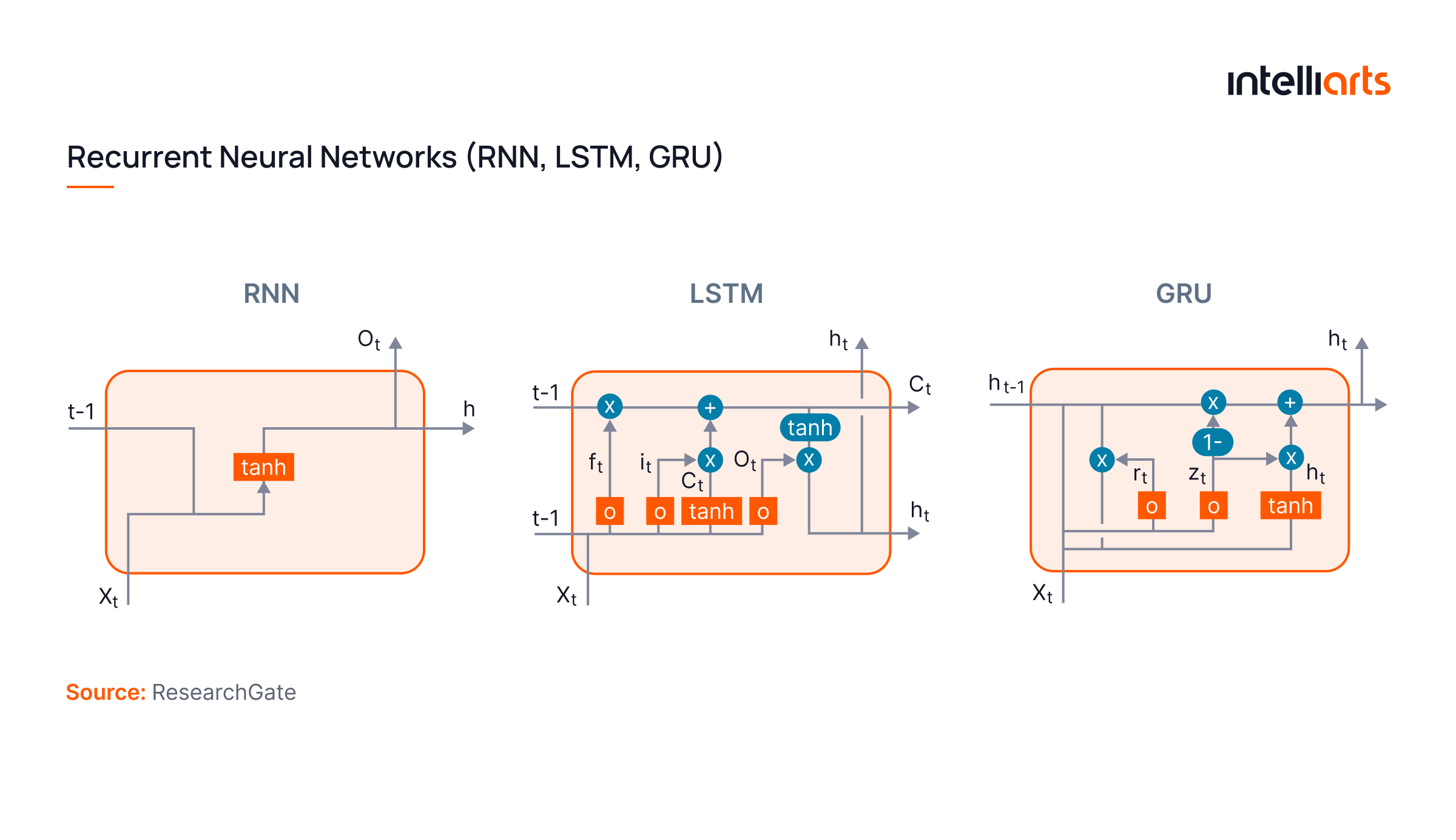

Example 1: Recurrent Neural Networks (RNN, LSTM, GRU)

How it works: Maintains a memory of past inputs via hidden states that are updated at every time step.

Strengths:

- Learns long-term dependencies

- Suited for sequential, time-ordered data

- No need for manual features

Weaknesses:

- High training cost

- Needs lots of data

- Difficult to interpret

Best for: IoT data, load forecasting, traffic predictions
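
The hidden-state idea can be illustrated with a one-unit recurrent cell. This is a minimal sketch with fixed, untrained weights (`w_x`, `w_h`, and `b` are arbitrary assumptions). Real LSTM and GRU cells add gates on top of this basic recurrence.

```python
import math


def rnn_forward(inputs, w_x=0.5, w_h=0.8, b=0.0):
    """Run a one-unit RNN over a sequence and return all hidden states.

    h_t = tanh(w_x * x_t + w_h * h_{t-1} + b), starting from h_0 = 0.
    """
    h = 0.0
    states = []
    for x in inputs:
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states


# A single spike followed by zeros: the hidden state keeps a decaying trace.
states = rnn_forward([1.0, 0.0, 0.0])
print([round(s, 3) for s in states])
```

Even though the last two inputs are zero, the hidden state stays positive, which is how the network carries information from earlier time steps forward.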

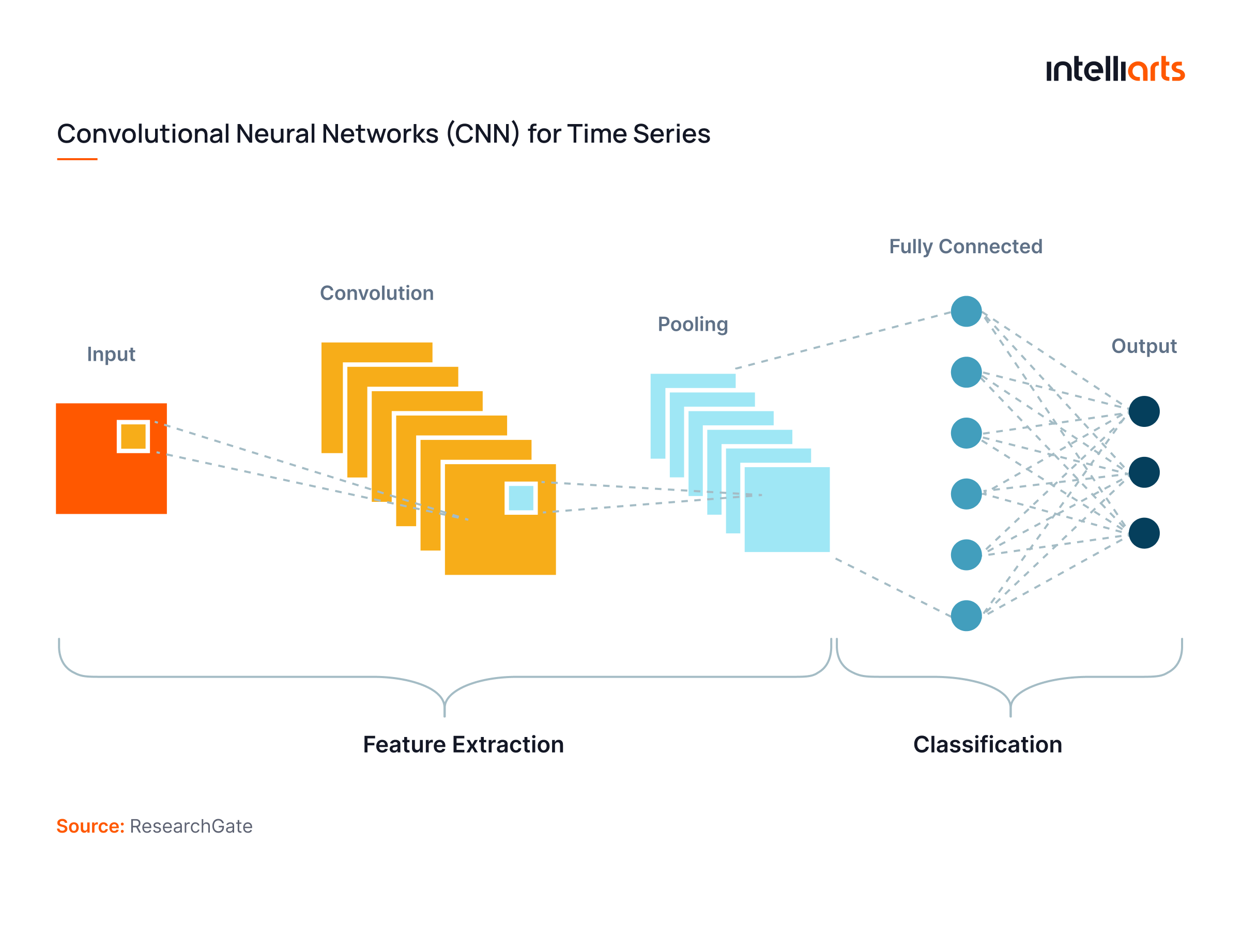

Example 2: Convolutional Neural Networks (CNN for Time Series)

How it works: Applies 1D convolutional filters to detect local temporal patterns.

Strengths:

- Fast to train

- Effective for short-term dependencies

- Good at capturing local patterns

Weaknesses:

- Limited in modeling long sequences

- Less effective without regular sampling

Best for: Activity recognition, anomaly detection, classification
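
A 1D convolutional filter can be sketched in a few lines. The function `conv1d`, the hand-picked `edge_filter`, and the signal are illustrative assumptions. In a trained CNN the filter weights are learned rather than chosen by hand.

```python
def conv1d(series, kernel):
    """Slide `kernel` over `series` ("valid" mode, no padding).

    As in most deep learning libraries, this is cross-correlation:
    the kernel is not flipped.
    """
    k = len(kernel)
    return [
        sum(series[i + j] * kernel[j] for j in range(k))
        for i in range(len(series) - k + 1)
    ]


signal = [0, 0, 1, 1, 1, 0, 0]
edge_filter = [-1, 1]  # responds to a jump between neighbouring points
print(conv1d(signal, edge_filter))  # [0, 1, 0, 0, -1, 0]
```

The output is non-zero only where the signal changes, which shows how a filter picks up a local temporal pattern regardless of where it occurs in the sequence.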

Explore the data science service line by Intelliarts at our respective service page.

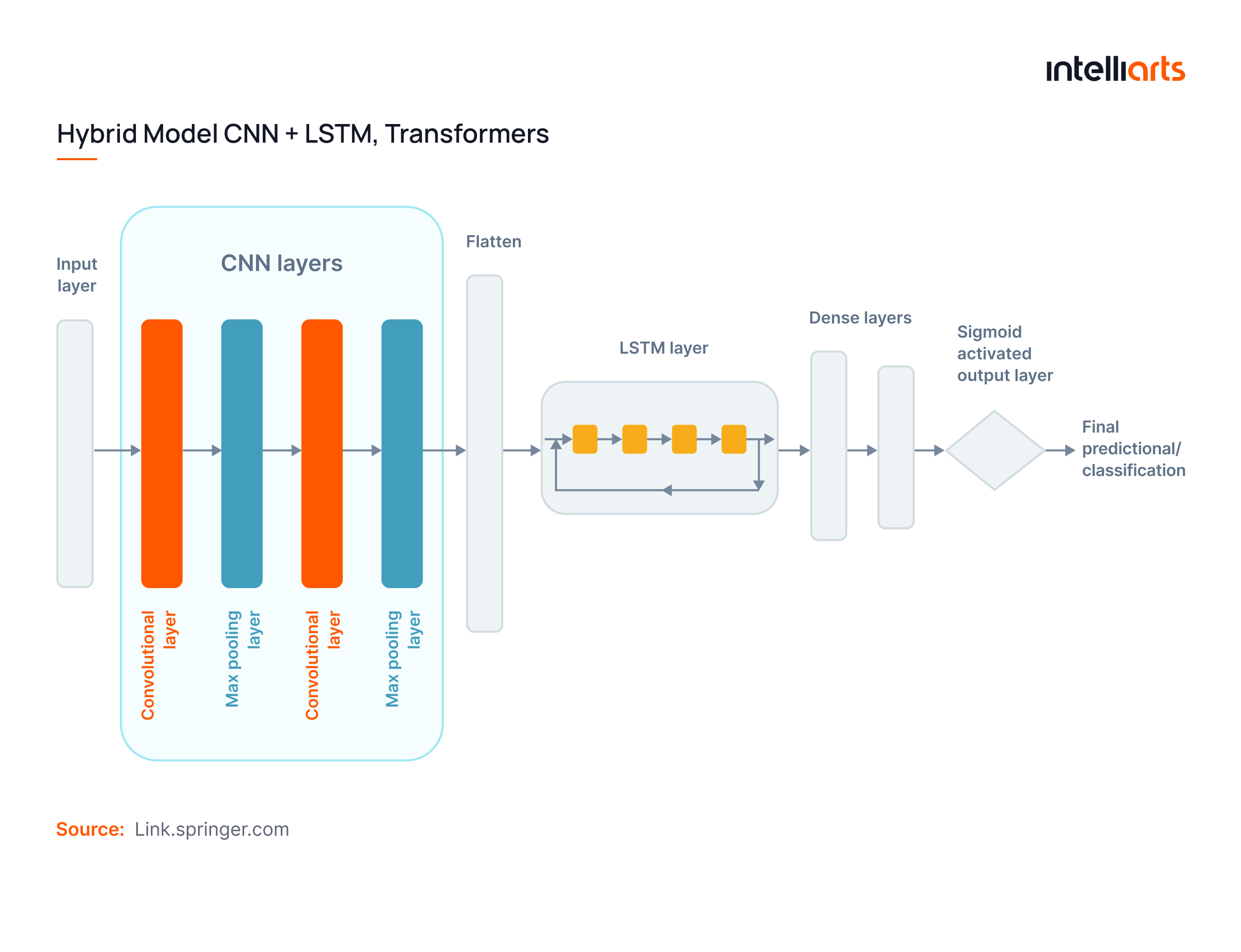

#3 Hybrid & Automated ML solutions

Hybrid models combine strengths from different architectures (e.g., CNNs for local patterns and LSTMs or Transformers for sequence memory), while AutoML platforms automate key ML processes like feature selection, hyperparameter tuning, and model evaluation.

Example: Hybrid Models (e.g., CNN + LSTM, Transformers)

How it works: CNN layers capture local features while LSTM or Transformer layers learn sequential dependencies.

Strengths:

- High forecasting accuracy

- Learns both short- and long-term patterns

- Scales to large datasets

Weaknesses:

- Requires high compute

- Can be opaque

- Complex to deploy

Best for: Financial forecasting, multivariate forecasting, real-time analytics

#4 Unsupervised Learning methods

Unsupervised models detect hidden patterns in time series without needing labeled output data. They are commonly used for anomaly detection, clustering, and pattern segmentation. Techniques include autoencoders, dimensionality reduction (PCA), and clustering algorithms like DBSCAN or k-Means.

Example: Autoencoders / Clustering (e.g., DBSCAN, k-Means)

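How it works: An autoencoder learns to compress and reconstruct its inputs, so a high reconstruction error indicates an anomaly. Clustering algorithms group similar observations, and points that sit far from every cluster stand out as outliers.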

Strengths:

- No labels needed

- Detects anomalies or pattern shifts

- Useful for exploratory tasks

Weaknesses:

- Doesn’t forecast

- Requires careful tuning

- Results may be hard to interpret

Best for: Fraud detection, sensor drift, customer segmentation
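
The reconstruction-error logic can be sketched without training a neural network. In the example below a rolling median acts as a stand-in for the autoencoder's reconstruction. That substitution, the function names, the readings, and the threshold of 5 are all assumptions made for illustration.

```python
from statistics import median


def rolling_median_reconstruction(series, window=3):
    """Stand-in 'reconstruction': median of a centred window around each point."""
    half = window // 2
    out = []
    for i in range(len(series)):
        lo, hi = max(0, i - half), min(len(series), i + half + 1)
        out.append(median(series[lo:hi]))
    return out


def flag_anomalies(series, reconstruction, threshold):
    """Return indices whose absolute reconstruction error exceeds `threshold`."""
    return [
        i for i, (y, y_hat) in enumerate(zip(series, reconstruction))
        if abs(y - y_hat) > threshold
    ]


readings = [10, 11, 10, 50, 11, 10, 12]  # made-up sensor values
reconstructed = rolling_median_reconstruction(readings)
print(flag_anomalies(readings, reconstructed, threshold=5))  # [3]
```

With a trained autoencoder, only `rolling_median_reconstruction` would change. The thresholding step stays the same, and choosing the threshold is part of the careful tuning listed among the weaknesses.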

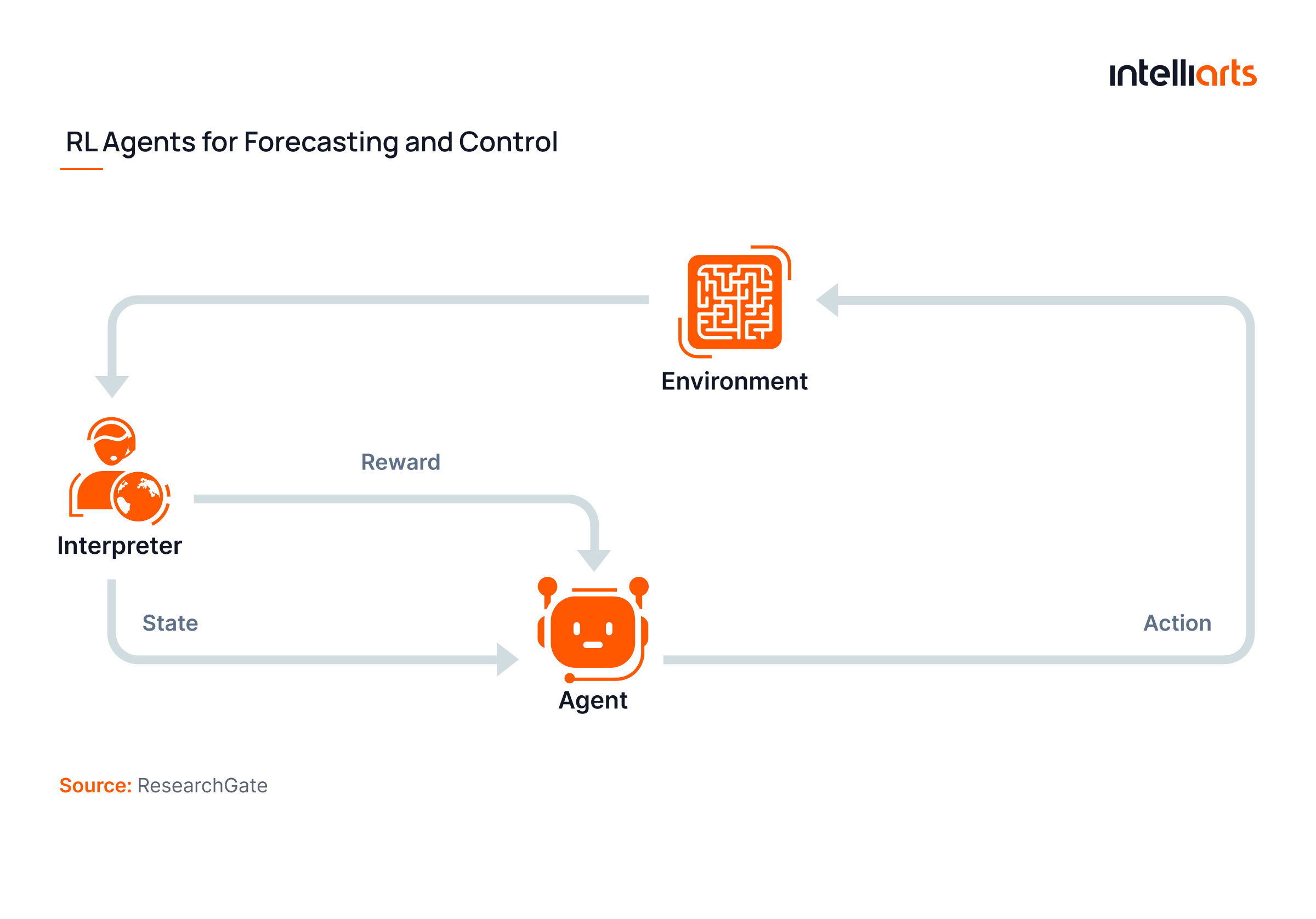

#5 Reinforcement Learning for time series

Reinforcement Learning (RL) is used when decisions must be made over time with feedback, such as in inventory control or trading systems. RL agents learn policies by interacting with their environment, optimizing for cumulative rewards. Though complex, RL introduces adaptability and dynamic strategy formation into time series modeling.

Example: RL Agents for Forecasting and Control

How it works: An agent learns which actions to take by trial and error, guided by the rewards it receives.

Strengths:

- Learns dynamic, adaptive strategies

- Optimizes for long-term outcomes

- Useful where feedback is delayed

Weaknesses:

- Complex to train

- Needs simulation environments

- High data and computing needs

Best for: Trading bots, pricing engines, supply chain control
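
Learning from rewards can be illustrated with a single tabular Q-learning update, one common RL algorithm. The inventory states, actions, reward, learning rate `alpha`, and discount factor `gamma` below are hypothetical values chosen for the sketch.

```python
def q_update(q, state, action, reward, next_state, alpha=0.1, gamma=0.9):
    """Apply one tabular Q-learning update in place and return the new value.

    Q(s, a) += alpha * (reward + gamma * max_a' Q(s', a') - Q(s, a))
    """
    best_next = max(q[next_state].values())
    q[state][action] += alpha * (reward + gamma * best_next - q[state][action])
    return q[state][action]


# Hypothetical inventory setting: states are stock levels, actions are orders.
q_table = {
    "low": {"order": 0.0, "wait": 0.0},
    "high": {"order": 0.0, "wait": 1.0},
}
# Ordering while stock was low earned a reward of 2 and led to high stock.
print(q_update(q_table, "low", "order", reward=2.0, next_state="high"))
```

Repeating this update over many simulated interactions is what lets the agent favor actions with the best long-term payoff, and it is also why RL typically needs a simulation environment.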

The goal of forecasting is not to predict the future but to tell you what you need to know to take meaningful action in the present.— Paul Saffo, a Technology Forecaster

Note. Of the listed approaches, supervised models, deep learning approaches, and hybrid and automated solutions are the ones aimed directly at time series forecasting. Unsupervised learning and reinforcement learning are also applicable, but keep in mind that unsupervised methods are anomaly detection tools rather than forecasting tools, while reinforcement learning is intended for sequential decision-making rather than standard forecasting.

How to choose the right time series machine learning approach?

Let’s explore how to choose a machine learning time series model based on different factors:

#1 Business goals

- Forecasting: Use Supervised Learning, Deep Learning, or Hybrid Models to predict future values like sales, demand, or load.

- Anomaly detection: Use Autoencoders or Clustering Methods to detect unexpected spikes, drops, or unusual behavior.

- Classification: Use CNNs, RNNs, or Tree-based Models to label sequences such as fault types, customer behavior, or device states.

- Decision-making: Use Reinforcement Learning to optimize sequential strategies in areas like trading, inventory, or pricing.

Explore the ML-powered error detection success story by Intelliarts to see how we used gradient boosting techniques to implement an ML system for error detection in fleets.

#2 Data size considerations

- Small datasets: Use Tree-based Models or Probabilistic Approaches for stable performance with limited data.

- Large datasets: Use Deep Learning or Hybrid Models to capture complex patterns and scale across variables.

#3 Data frequency considerations

- Low-frequency data: Use Simpler Models like XGBoost or Regression to model weekly or monthly trends.

- High-frequency data: Use LSTM, CNNs, or Transformers to handle second-by-second or minute-level inputs.

#4 Data quality considerations

- Noisy data with outliers: Use Tree-based Models, which tolerate outliers better than ARIMA or Holt-Winters.

- Irregularly sampled data: Resample to a regular interval before using CNNs, which are less effective without regular sampling.

#5 Time series structure

- Univariate series: Use ARIMA, Exponential Smoothing, or Tree-based Models for single-variable trends.

- Multivariate series: Use LSTM, XGBoost, or Transformer-based Models to incorporate multiple influencing variables.

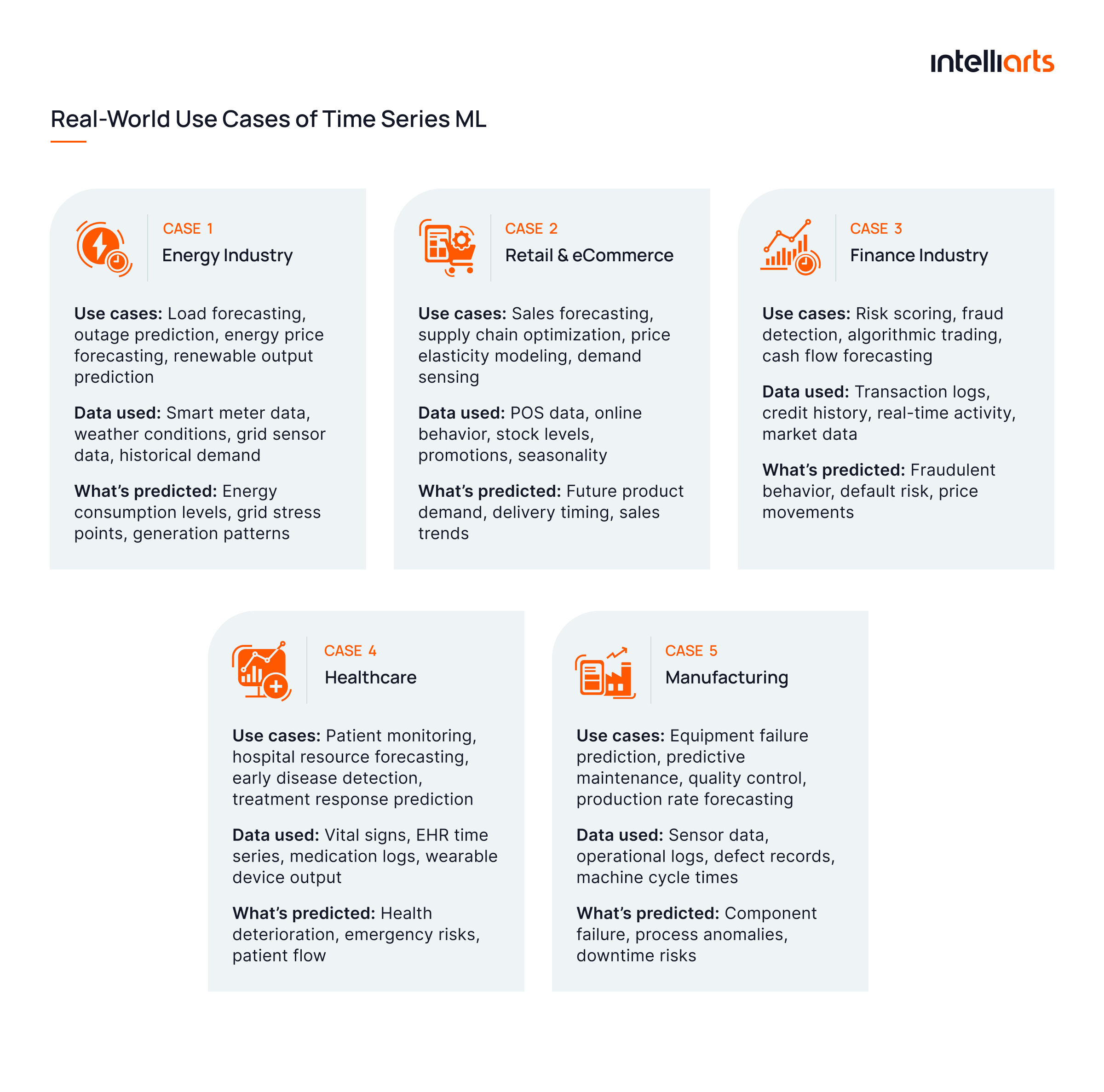

Real-world use cases of time series ML

The sections above briefly mentioned typical use cases of time series machine learning models. Now let’s map them to real-world use cases from several business domains:

#1 Energy Industry

Use cases: Load forecasting, outage prediction, energy price forecasting, renewable output prediction

Data used: Smart meter data, weather conditions, grid sensor data, historical demand

What’s predicted: Energy consumption levels, grid stress points, generation patterns

Business benefits:

- Balance supply and demand efficiently

- Reduce operational costs and prevent blackouts

- Support renewable energy integration

#2 Retail & eCommerce

Use cases: Sales forecasting, supply chain optimization, price elasticity modeling, demand sensing

Data used: POS data, online behavior, stock levels, promotions, seasonality

What’s predicted: Future product demand, delivery timing, sales trends

Business benefits:

- Prevent overstock or stockouts

- Boost campaign ROI through accurate timing

- Improve inventory turnover

#3 Finance Industry

Use cases: Risk scoring, fraud detection, algorithmic trading, cash flow forecasting

Data used: Transaction logs, credit history, real-time activity, market data

What’s predicted: Fraudulent behavior, default risk, price movements

Business benefits:

- Detect fraud early

- Automate risk management

- Enhance credit decision accuracy

#4 Healthcare

Use cases: Patient monitoring, hospital resource forecasting, early disease detection, treatment response prediction

Data used: Vital signs, EHR time series, medication logs, wearable device output

What’s predicted: Health deterioration, emergency risks, patient flow

Business benefits:

- Enable proactive care

- Reduce emergency visits

- Optimize resource allocation

#5 Manufacturing

Use cases: Equipment failure prediction, predictive maintenance, quality control, production rate forecasting

Data used: Sensor data, operational logs, defect records, machine cycle times

What’s predicted: Component failure, process anomalies, downtime risks

Business benefits:

- Minimize unplanned downtime

- Cut maintenance costs

- Improve product consistency

As you can see, there are many ways to apply time series forecasting with machine learning, and most businesses can benefit from them. However, developing a time series machine learning solution and integrating it into a company’s existing digital systems requires substantial expertise and experience. Therefore, it should be entrusted to qualified ML software engineering agencies only.

Explore time series data examples in another blog post written by Intelliarts experts.

Best practices for implementing time series ML

Finally, let’s proceed with best practices and tips on developing and implementing a time-series ML solution. To provide the most value for different groups of readers, we split our recommendations into business-focused and engineer-focused sections:

#1 Tips for businesses:

- Define the business objective clearly. Identify whether your goal is forecasting, anomaly detection, classification, or strategic decision-making. Communicate your need to your software provider accordingly.

- Align expected ML output with actual business processes. Forecasts must integrate into operational workflows (e.g., dashboards, APIs, alerts). Without actionability, accurate predictions offer limited value.

- Start small, then scale. Run a pilot on a specific product line, process, or segment before full deployment. Use early results to build internal trust and refine processes.

- Understand data readiness. Ensure historical data is available, complete, and properly timestamped. Poor data quality can delay or distort implementation. Don’t forget the Garbage In Garbage Out (GIGO) rule, which emphasizes the importance of supplying ML with high-quality data only.

#2 Tips for engineers:

In the feature engineering stage, design features that capture time dynamics by enriching your dataset with time-relevant variables:

- Lag features (e.g., y_{t-1}, y_{t-2})

- Rolling statistics (e.g., 7-day mean, rolling std)

- Calendar/time indicators (e.g., month, holidays, hour)

- Exogenous variables (e.g., weather, promotions)
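
The feature types listed above can be combined into one row per time step. The sketch below is a minimal illustration: `build_features`, the column names, and the sales figures are assumptions, and exogenous variables are left out for brevity.

```python
from datetime import date, timedelta


def build_features(dates, values, window=3):
    """Build one feature row per day from past values only.

    Rows start at index `window`, so every lag and rolling statistic
    is computed from observations strictly before the target day.
    """
    if window < 2:
        raise ValueError("window must be at least 2 to provide lag_2")
    rows = []
    for t in range(window, len(values)):
        past = values[t - window:t]
        rows.append({
            "lag_1": values[t - 1],
            "lag_2": values[t - 2],
            "rolling_mean": sum(past) / window,
            "month": dates[t].month,
            "day_of_week": dates[t].weekday(),  # Monday == 0
            "target": values[t],
        })
    return rows


start = date(2024, 1, 1)  # a Monday
days = [start + timedelta(days=i) for i in range(5)]
sales = [10, 12, 14, 13, 15]  # made-up numbers
for row in build_features(days, sales):
    print(row)
```

Exogenous variables such as weather or promotions would be added as extra keys, as long as their values are known at prediction time.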

Ensure no future data leaks into training by following these tips:

- Never use future labels or future-derived features

- Fit preprocessing steps (scaling, encoding) on the training data only, then apply the fitted transformation to the test sets

- Watch for hidden leakage in joins or feature aggregation
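
The preprocessing tip can be sketched with a hand-rolled standard scaler. The function names and the series are illustrative assumptions. The key point is that the scaling parameters come from the training split alone.

```python
from statistics import mean, pstdev


def fit_scaler(train):
    """Learn scaling parameters from the training split only."""
    mu, sigma = mean(train), pstdev(train)
    if sigma == 0:
        raise ValueError("training data is constant; cannot standardise")
    return mu, sigma


def transform(values, params):
    """Standardise values with previously fitted parameters."""
    mu, sigma = params
    return [(v - mu) / sigma for v in values]


series = [10, 12, 14, 16, 30, 40]  # made-up numbers
train, test = series[:4], series[4:]  # chronological split

params = fit_scaler(train)  # test values never touch the fit
train_scaled = transform(train, params)
test_scaled = transform(test, params)
print(params)
print(test_scaled)
```

Fitting the scaler on the full series instead would let the later values of 30 and 40 influence the mean and standard deviation used during training, which is exactly the kind of leakage to avoid.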

Preserve temporal order when evaluating models by following these practices:

- Split data chronologically, never randomly

- Use rolling-window or expanding-window validation

- Always test on future data to simulate real-world performance
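
Expanding-window validation can be sketched as a small index generator. The function name and the default sizes are assumptions made for illustration. scikit-learn's `TimeSeriesSplit` offers a ready-made alternative.

```python
def expanding_window_splits(n_samples, n_splits=3, test_size=2):
    """Yield (train_indices, test_indices) with the test fold always later.

    The training window grows with each split; the test window slides forward.
    """
    first_test_start = n_samples - n_splits * test_size
    if first_test_start < 1:
        raise ValueError("not enough samples for the requested splits")
    for k in range(n_splits):
        test_start = first_test_start + k * test_size
        train = list(range(0, test_start))
        test = list(range(test_start, test_start + test_size))
        yield train, test


for train_idx, test_idx in expanding_window_splits(10):
    print(train_idx, "->", test_idx)
```

Every test index is larger than every training index in the same split, so the model is always evaluated on data that comes after what it was trained on.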

As extra advice, we highly recommend leveraging AutoML solutions like Google AutoML when resources such as time or knowledge are limited. Still, validate outputs manually before deploying.

Final take

Machine learning for time series overcomes the limitations of traditional statistical models by handling non-linear, multivariate, and large-scale data. It powers accurate forecasting, anomaly detection, and decision-making across industries like energy, retail, finance, and healthcare. With careful data preparation, time-aware validation, and continuous monitoring, engineers can offer businesses high-impact solutions that turn complex time series data into actionable insights.

Should you need assistance with time series machine learning development, don’t hesitate to reach out to Intelliarts. We’ve been in the software market for more than 25 years, delivering high-end solutions and tailored services. With over 90% of recurring customers and 54% of senior staff engineers, we are ready, willing, and able to tackle a project of any complexity at your request.

FAQ

Which machine learning model works best for time series forecasting?

The best model depends on the use case. For complex patterns, models like XGBoost, LSTM, and Prophet perform well. LSTM handles long-term dependencies effectively, while XGBoost works well with structured time series data and engineered features.

How long does it take to implement a machine learning solution for time series?

Time series machine learning development typically takes 4–12 weeks for a smaller-scale solution or an extension to existing software. Duration varies based on data availability, model complexity, and integration needs. Custom feature engineering and testing add to the timeline.

Can machine learning models be integrated into our existing business systems?

Yes, machine learning time series solutions can be integrated into ERP, CRM, or BI systems through APIs or scheduled batch jobs. Most platforms support deploying machine learning time series models for real-time or batch forecasting.