Solution Highlights

- Tested the hypothesis of implementing the AI assistant for practical assignments

- Researched and consulted on the best way to build the AI agent by combining classical ML and LLM with programmatic algorithms

- Effectively built the PoC with a UI and basic functionality required

- Created the solution to stop users from dropping out of the course after they got stuck

About the Project

Customer:

Our partner (under NDA) offers cybersecurity training with browser-based learning content for all skill levels. Their value proposition includes enabling users to launch virtual machines to conduct simulated hacker attacks directly from the platform, which allows getting practical experience while also skipping the hassle of downloading and configuring.

Challenges & Project Goals:

As the company specializes in education in the cybersecurity domain, the customer wanted to create an AI agent, a so-called step suggester, that should analyze the student’s work in the virtual environment and give recommendations on what to do next. The idea was to make the practical assignments more efficient and motivate students not to drop out of the online course even if they “got stuck”.

The company reached out to Intelliarts to research this recommendation system and the best approach for AI student assistant development and implementation, as well as to answer whether it was possible to solve the business challenge that the company wanted to address with this AI agent.

Solution:

During the discovery phase, the Intelliarts team proved the possibility and feasibility of building the AI agent that our partner intended to create. We analyzed the available data and recommended how to collect more data to enhance future results. The team then researched and consulted the company on the best way to build this AI assistant for students by combining a classical machine learning (ML) approach and a large language model (LLM) with an algorithmic programmatic method to detect straightforward hacker attacks.

The problem analysis also showed that the main challenge for our customer was to improve the retention rate. With this information, we also advised on how to rewrite learning materials and build tasks to keep users focused and interested.

ML Development, Data Analysis, Cloud Services, Big Data, Data Science

Technology Solution

Machine learning development begins with hypothesis testing. If the initial hypothesis is validated, the ML team proceeds to develop an ML solution. If it is not confirmed, a new hypothesis is formulated and tested. Accordingly, in this project, our data scientists first performed data analysis to determine the feasibility of creating the step suggester.

It turned out that the customer had enough data only for the MVP on a scale of several learning challenges. That’s why we created recommendations on what data to gather and how to approach data collection to improve future results.

We also understood that the customer wasn’t sure what the final solution should look like (whether it should be an AI assistant for students or a set of ML models). After a series of discussions, we offered to combine an AI assistant with algorithms. The essence was that when a user got stuck while taking the course, the system would analyze at what stage that happened and give recommendations for the next steps to continue studying.

During the practical assignments, a user works with different cybersecurity tactics and techniques, including phishing, email collection, impersonation, etc. Some of these techniques are simple enough to be detected with hand-written rules in code. Others are more complicated and require ML. That’s why we decided to describe part of the solution with code and train ML models for the rest. Because ML outputs are probabilistic, it was more reliable to use deterministic code for simpler tasks whenever possible.
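The split between code and ML described above can be sketched roughly as follows. This is a minimal illustration, not the project’s actual implementation: the rule table, the `Detection` type, the `toy_model` stand-in, and the 0.7 threshold are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Tuple


@dataclass
class Detection:
    technique: str
    confidence: float
    source: str  # "rule" or "model"


# Hypothetical rule table: the first word of a command maps to a technique.
RULES = {
    "nmap": "network scanning",
    "hydra": "password brute force",
}


def detect_by_rule(command: str) -> Optional[Detection]:
    """Deterministic check for techniques simple enough to describe in code."""
    tokens = command.split()
    if not tokens:
        return None
    technique = RULES.get(tokens[0])
    if technique is None:
        return None
    return Detection(technique, 1.0, "rule")


def detect(command: str,
           model: Callable[[str], Tuple[str, float]],
           threshold: float = 0.7) -> Optional[Detection]:
    """Try the rules first; fall back to a probabilistic model otherwise."""
    rule_hit = detect_by_rule(command)
    if rule_hit is not None:
        return rule_hit
    technique, probability = model(command)
    if probability < threshold:
        return None  # the model is not confident enough to label the action
    return Detection(technique, probability, "model")


def toy_model(command: str) -> Tuple[str, float]:
    """Stand-in for a trained classifier (assumption for this sketch)."""
    if "mail" in command:
        return ("phishing", 0.82)
    return ("unknown", 0.10)


print(detect("nmap -sV 10.0.0.5", toy_model))
print(detect("sendmail victim@example.com", toy_model))
print(detect("ls -la", toy_model))
```

Checking the rules first means a model prediction is only consulted when no deterministic rule applies, which matches the preference for code over probabilities on simple tasks.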

Besides, since the problem analysis proved retention rate as the main challenge for our partner, we used this information to give specific recommendations on how to improve learning materials and build tasks to promote users’ interest.

We also calculated the costs of the solution to confirm that it fit into the customer’s budget, as there were some worries that the LLM might be too expensive in this case.

Now let’s move to how our data scientists built the AI tool for students:

- A user has to solve 10 problems to pass one assignment. The research showed that a user could solve, for example, five of them and then have no idea what to do next. This was one of the first insights that we provided to the customer: the user might need help, such as an instruction or a plan, while solving a problem.

- Together, we decided to build our strategy based on this insight: to structure and describe the steps that the user was taking while completing the assignment to be able to analyze them when the user was stuck again and give recommendations to the user.

- Based on the log data, our data scientists also concluded that each of the problems had its own algorithm. So we started to build these algorithms by working side by side with a subject-matter expert (SME), i.e., the cybersecurity specialist who developed this course.

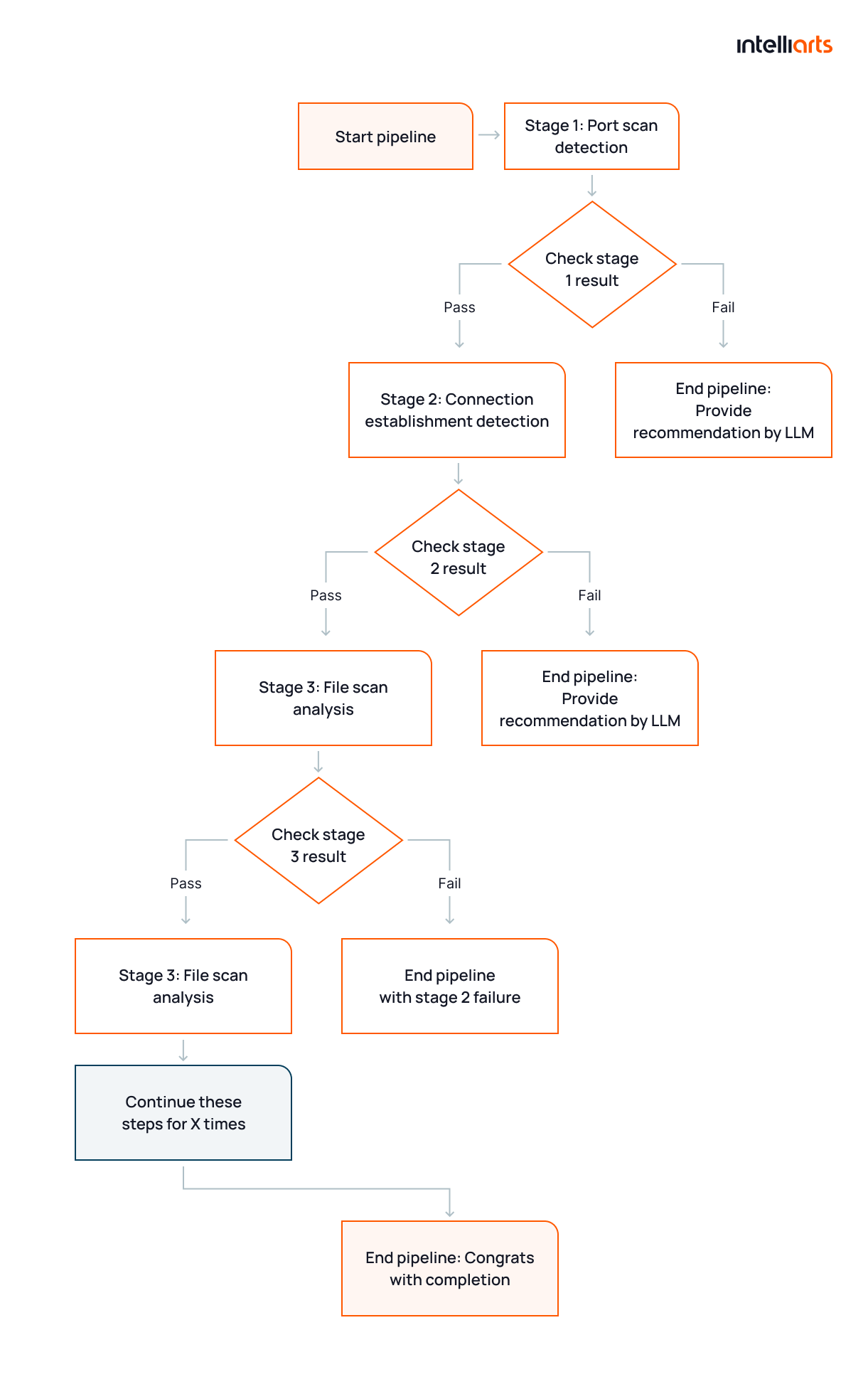

- As a result, for each problem, we developed an algorithm plus an LLM component. The LLM helped to classify the user’s actions and was also responsible for communication with the user. Our data scientists combined all this into one linear pipeline, which gave us a solution architecture with 10 steps, each with its own algorithm and LLM recommendation.

- Accordingly, when we ran the pipeline, we analyzed the data and checked whether the conditions of each step’s algorithm were met. If yes, we moved on to checking the next step. If the conditions were not met, we understood that this was the place where the user got stuck. The LLM then produced a useful output for the user, based on the steps they had already completed, with a recommendation for what to do next.
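The linear pipeline described in the list above can be sketched as follows. This is a simplified illustration under stated assumptions: the `Step` type, the two example steps, the log format (a list of shell commands), and the `fake_llm` stand-in are hypothetical and are not taken from the project.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Sequence


@dataclass
class Step:
    name: str
    # Algorithmic check over the activity log (hypothetical log format).
    is_done: Callable[[Sequence[str]], bool]


def find_stuck_step(steps: Sequence[Step], log: Sequence[str]) -> Optional[int]:
    """Return the index of the first step whose conditions are not met."""
    for index, step in enumerate(steps):
        if not step.is_done(log):
            return index
    return None  # every step passed its check


def build_prompt(steps: Sequence[Step], stuck_index: int) -> str:
    """Summarize completed steps and the stuck step for the LLM."""
    done = ", ".join(s.name for s in steps[:stuck_index]) or "none"
    return (f"Completed steps: {done}. "
            f"The student is stuck on: {steps[stuck_index].name}. "
            "Recommend what to do next.")


def suggest_next_step(steps: Sequence[Step],
                      log: Sequence[str],
                      llm: Callable[[str], str]) -> str:
    stuck_index = find_stuck_step(steps, log)
    if stuck_index is None:
        return "All steps are complete."
    return llm(build_prompt(steps, stuck_index))


# Hypothetical two-step assignment and a stand-in for the real LLM call.
steps = [
    Step("scan the target", lambda log: any(c.startswith("nmap") for c in log)),
    Step("read the flag file", lambda log: any("flag.txt" in c for c in log)),
]


def fake_llm(prompt: str) -> str:
    return f"[LLM reply to] {prompt}"


print(suggest_next_step(steps, ["nmap -sV 10.0.0.5"], fake_llm))
```

Because the checks run in order and stop at the first failure, the pipeline always knows which steps the user has already completed, and that context is what goes into the LLM prompt.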

Our team completed the discovery phase and built a PoC with a UI and the basic functionality the customer required, covering one of the 700 planned tasks. The solution was for demonstration purposes only and ran on an engineer’s machine. Still, it helped to test the hypothesis and prove that the AI agent can be implemented as planned. Now we’re assessing the scope for the remaining tasks before we move on to the solution implementation.

Moreover, several factors made this project more challenging but interesting as well:

- Large volumes of data: At first, our partner didn’t know what data they tracked or what data the project required. After we helped them understand the data and made recommendations on what to track, the company realized they produced gigabytes of data in half a day.

- Real-time work: The solution had to work in real-time, which complicated the task. We needed to think over what technologies to use so it was possible to build the full-scale solution later.

- Limited domain knowledge: Since we had limited knowledge of the cybersecurity domain, it mattered a lot how quickly and effectively the team could work with the SME. The collaboration was fruitful: thanks to it, for instance, we understood that the customer needed to structure the assignment steps and how to describe them better. All in all, our data scientists managed to understand the domain and the specific tasks that our partner worked with.

Business Outcomes

Earlier, the company experienced a high customer turnover as users could drop out of the course after they got stuck on the practical assignments and had no idea how to continue. With the step suggester, the user is expected to finish the course and, hence, should theoretically be more satisfied and motivated to try another one. Overall, our partner has a range of long-term expectations towards the AI agent, including the ability to retain customers more efficiently and make the process of learning easier and faster for users.

Even though the solution is still to be implemented, the Intelliarts team proved the feasibility of the AI agent. We also provided specific recommendations on how to create the AI assistant for students in the most efficient manner. For example, we advised on the data pipeline and on the data that should be collected for model enhancement.