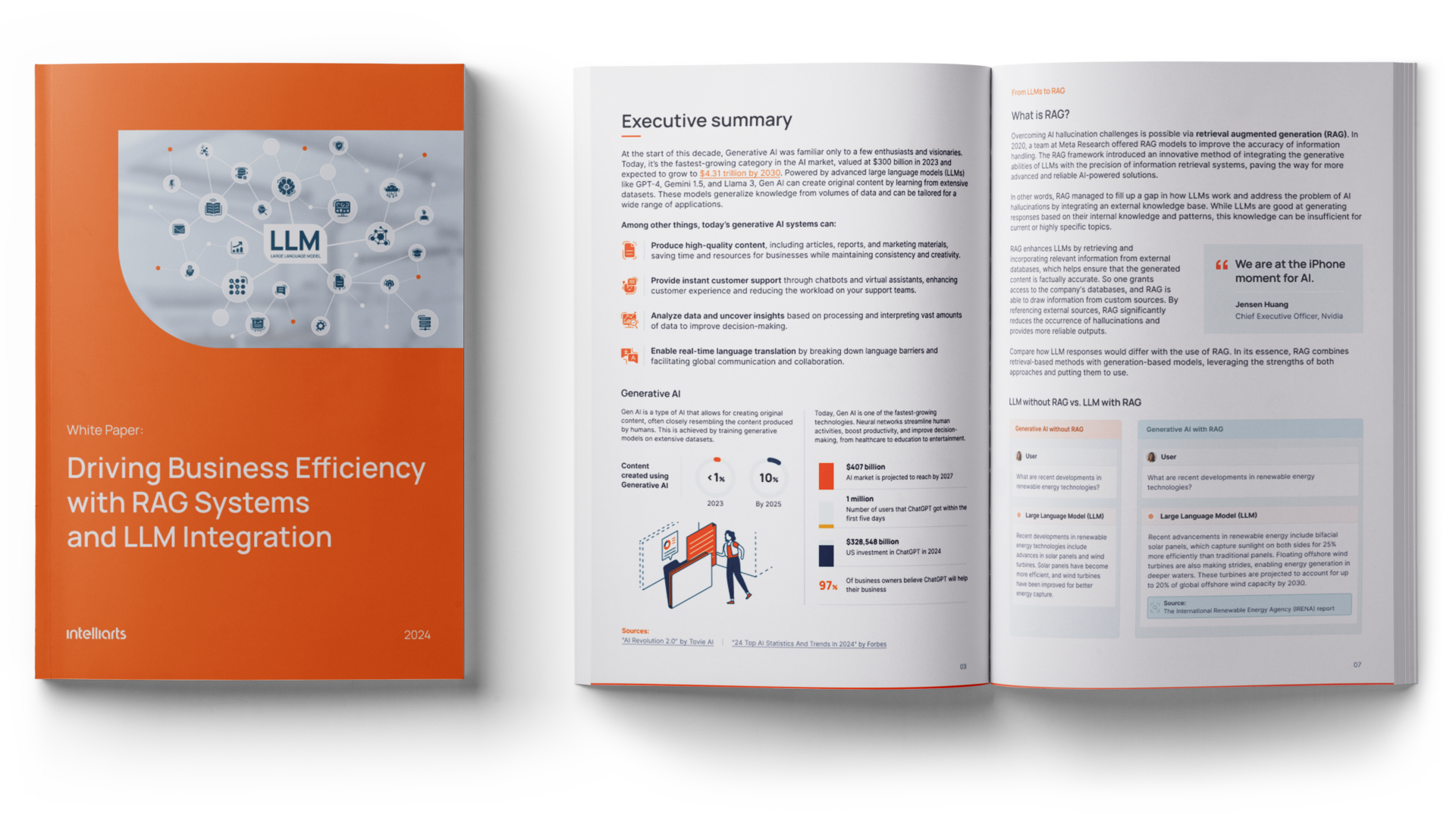

White Paper: Driving Efficiency with RAG & LLMs

Looking to improve the accuracy of your AI chatbot by 40%? Retrieval-augmented generation (RAG) can significantly enhance your LLM's performance, helping it deliver more accurate, contextually relevant responses.

With extensive hands-on experience in RAG systems and a team where 40% of engineers are senior-level experts, our ML engineers share insights and top use cases of the RAG framework in this white paper. Here you'll learn about:

- The RAG approach and how it works

- The value RAG brings to businesses

- 10 practical RAG use cases

- Implementation tips for RAG-based LLM systems

By integrating retrieval-augmented generation into your LLM system, you can combine the LLM's generation capabilities with retrieval from your own data sources to build more intelligent, context-aware systems that drive business success.
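As a rough illustration of the retrieve-then-generate pattern, here is a minimal Python sketch. It assumes a toy in-memory document list and uses bag-of-words cosine similarity as a stand-in for the embedding-based vector search a production RAG system would typically use. The function names and sample documents are hypothetical, and the final LLM call is left out because it depends on your model provider.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Retrieval step: return the k documents most similar to the query."""
    q = Counter(tokenize(query))
    # sorted() is stable, so documents with equal scores keep their input order.
    ranked = sorted(docs, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]


def build_prompt(query: str, context: list[str]) -> str:
    """Augmentation step: ground the question in the retrieved passages."""
    ctx = "\n".join(f"- {c}" for c in context)
    return (
        "Answer using only the context below.\n"
        f"Context:\n{ctx}\n\n"
        f"Question: {query}\nAnswer:"
    )


if __name__ == "__main__":
    # Hypothetical knowledge base for illustration only.
    docs = [
        "Refunds are processed within 5 business days.",
        "Our office is open Monday to Friday.",
        "Refund requests require an order number.",
    ]
    question = "How long do refunds take?"
    prompt = build_prompt(question, retrieve(question, docs, k=2))
    # Generation step: send `prompt` to the LLM of your choice (not shown).
    print(prompt)
```

Keeping retrieval and prompt assembly as separate functions makes it straightforward to swap the toy similarity function for a real embedding model and vector store without touching the prompt logic.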

FAQ

How long does it take to implement a RAG system with existing LLM infrastructure?

Implementing a RAG system with existing LLM infrastructure can take from several weeks to a few months, depending on the complexity of the setup and the data sources involved. With a well-defined project scope and a skilled team like Intelliarts, businesses can accelerate the integration process.

What specific business areas will benefit most from RAG-powered LLMs?

RAG-powered LLMs provide significant benefits across different business areas, including customer service, content generation, information retrieval, market research and analysis, language translation, and more. In our white paper on RAG systems for LLMs, we discuss 10 RAG use cases, backed by real-world examples.

What support does Intelliarts provide for implementing RAG in our existing workflows?

Intelliarts provides end-to-end support for integrating RAG into existing workflows, from initial planning and system design to deployment and maintenance. Our team also offers tailored guidance on best practices, data integration, and performance optimization, along with comprehensive LLM development services, to ensure a smooth and effective RAG implementation.